Today I would like to share my notes on Ceph concepts, deployment, and configuration. Before we start, I should say that this post includes my personal opinions about Ceph; they may not be fair or correct for everyone, and you are welcome to share your own opinion with me. Also, since the material dates from May of last year, I am not sure every step can be reproduced exactly today, but as long as you have the Ceph installation binaries, you should still be able to follow the steps below and try out most of the scenarios.

First of all, what is Ceph?

For me, Ceph is a free software storage platform designed to present object, block, and file storage from a single distributed computer cluster.

High Level Diagram with Key Elements:

I'm sorry if the repetition bores you. This is my personal learning style: recapping now and then while reading helps me remember.

RADOS (Reliable Autonomic Distributed Object Store) is the key element of Ceph, and it includes two basic components: OSD and MON.

OSD includes features as below:

- OSD -> FS(btrfs, xfs, ext4) -> Disk

- one per disk

- serve stored "object" to clients

- Intelligently peer for replication & recovery

MON is the Ceph Monitor (the "M" blocks in the diagram above). Monitors are the brain cells of the cluster: they track OSDs as they are added, detect failures, and initiate data reconstruction. They also provide consensus for distributed decision making.

I summarized the MON features below as well:

- maintain cluster

- Self-Healing : MON initiate recovery

- Monitors detect dead OSD

- Monitors allocate other OSDs via update CRUSH map

- allow future write to the new replica

- this relocation bases on Placement Group (PG)

- decision-making

- a small, odd number of monitors

- eg: the diagram above has 3 "M" blocks

- doesn't serve stored object
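Why a small, odd number of monitors? A minimal sketch, assuming decisions need a strict majority of monitors (the function names here are my own, not part of Ceph): going from 3 to 4 monitors does not let the cluster survive any more failures, while going from 3 to 5 does.

```python
def quorum_size(num_monitors):
    """Smallest number of monitors that forms a strict majority."""
    if num_monitors < 1:
        raise ValueError("need at least one monitor")
    return num_monitors // 2 + 1


def tolerated_failures(num_monitors):
    """How many monitors can fail while a majority can still be formed."""
    return num_monitors - quorum_size(num_monitors)


# Print: monitors, majority needed, failures tolerated.
for n in (1, 2, 3, 4, 5):
    print(n, quorum_size(n), tolerated_failures(n))
```

With 3 monitors the majority is 2, so one monitor can fail; a fourth monitor raises the majority to 3 and still tolerates only one failure.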

Hint: Ceph architecture, the relationship between the key components

Application <-> librados <-> socket <-> RADOS Cluster (OSD+MON)

Application <-> REST (internet) <-> radosgw <-> librados <-> socket <-> RADOS Cluster (OSD+MON)

Bottom to Top

- RADOS (Reliable Autonomic Distributed Object Store), which places data with CRUSH (Controlled Replication Under Scalable Hashing), includes

- OSD: object storage device (daemon) + MON: Monitors

- LIBRADOS: A library allowing apps to directly access RADOS (C, C++, Java, Python, Ruby, PHP)

- RGW (RADOS Gateway) is a web service gateway for S3 and Swift; the details are in the RADOSGW section below.

- RBD (RADOS Block Device) provides block device features; the details are in the (Working with RADOS Block Devices) section.

- CephFS is a POSIX-compliant file system that stores its data in a Ceph Storage Cluster, the same cluster that backs Ceph Block Devices, Ceph Object Storage with its S3 and Swift APIs, and the librados bindings. The details are in the (CephFS) and (In depth CephFS mount) sections and the ones that follow.

CRUSH: Controlled Replication Under Scalable Hashing

CRUSH includes the features below.

- a pseudo-random data distribution algorithm that efficiently and robustly distributes object replicas across a heterogeneous, structured storage cluster

- quick calculate; no look up

- avoid go through a centralized server or broker

- repeatable, deterministic

- Statistically uniform distribution

- Stable Mapping

- limited data migration on changes

- Rule-Base configuration

- Infrastructure topology aware

- Adjustable replication

- Weighting
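To make "no lookup, repeatable, deterministic, limited data migration" more concrete, here is a small sketch. It is NOT the real CRUSH algorithm; it uses weighted rendezvous hashing as a stand-in, and the function names and the OSD weights are my own assumptions. Every client that knows the OSD list can compute the same placement by itself, without asking a central server.

```python
import hashlib
import math


def _score(object_name, osd_id, weight):
    """Weighted rendezvous score, deterministic for a given (object, OSD) pair."""
    digest = hashlib.sha256(f"{object_name}:{osd_id}".encode()).digest()
    # Use 52 bits of the hash to build a float strictly between 0 and 1.
    bits = int.from_bytes(digest[:8], "big") >> 12
    u = (bits + 0.5) / 2**52
    return -weight / math.log(u)


def place(object_name, osd_weights, replicas):
    """Return `replicas` distinct OSD ids for an object, best score first."""
    ranked = sorted(
        osd_weights,
        key=lambda osd: _score(object_name, osd, osd_weights[osd]),
        reverse=True,
    )
    return ranked[:replicas]


# Hypothetical cluster: four OSDs with equal weight.
weights = {0: 1.0, 1: 1.0, 2: 1.0, 3: 1.0}
print(place("test", weights, 2))
```

Removing an OSD that does not hold a given object leaves that object's placement unchanged, which is the "limited data migration on changes" idea in miniature.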

Lab Spec.

First of all, set up the environment. Here is my lab spec, a Linux VM on VirtualBox (the ceph-deploy output later in this post reports Ubuntu 12.04, and the commands use apt-get). PS: I was using the VM provided by the instructor, an OVA (virtual appliance) that includes the install binaries.

- 1 CPU

- 384G RAM

- 4 SATA Ports

- 0 : 80G

- 1 : 10G

- 2 : 10G

- 3 : 10G

- 3 NIC Cards

- 1 : NAT, Port Forwarding : Name xxx , Protocol: TCP, Host Port: 22114, Guest Port:22

- 2 : Host-only Adapter

- 3 : Host-only Adapter

- Attach a local "share" folder if security constraints on your VM prevent using wget to fetch osdonly.txt or s3curl.pl.

Outline for Ceph Deployment

- Preparation

- Port Forwarding or HostOnly Adapter to connect your VM via SSH

- Add 3 extra SATA Drives or Add 2 extra VM as MON ( option )

- Update VirtualBox iso

- Grant user authorization

- Create/Copy SSH key for extra 2 VMs connection ( option )

- Installation

- Install "ceph-deploy"

- Install release key - release.asc

- Add release package - eg: dumpling, cuttlefish

- Update repository and install ceph-deploy

- Install ceph on host

- Install ceph (dumpling) via ceph-deploy

- ceph.conf creation

- add ip with hostname in /etc/hosts

- setup MON

- create ceph monitor keyring (ceph.mon.keyring)

- setup OSD

- get bootstraps keys generated by the MON

- double check disk presenting

- deploy OSD

Pre-Install Preparation:

PS: Since we set up port forwarding on the local host, you can access the VM over SSH through the forwarded port; just make sure an SSH server is installed on the VM before you try to connect with PuTTY/PieTTY.

1. Update VBoxLinuxAdditions.run via the VirtualBox ISO; you might try the command below.

- #sudo apt-get install virtualbox-guest-additions-iso

2. update user authorization via usermod -a -G

- #sudo usermod -a -G vboxsf ceph

- Review File System via #df

3. Since VirtualBox uses port forwarding, I can log in via SSH.

Use PuTTY, PieTTY, or another SSH tool to connect to:

localhost

22114

4. Create / Copy SSH key to local or other VMs(MON) or for later OSD deployment.

- #ssh-keygen

- #ssh-copy-id <hostname>

- #eval $(ssh-agent)

- #ssh-add

Install Ceph

Installing Ceph and creating a cluster rely heavily on "ceph-deploy". If you like, you can create a working directory for deploying Ceph called "ceph-deploy".

- #sudo apt-get update

- #mkdir $HOME/ceph-deploy

- #cd $HOME/ceph-deploy

Install Release Key

Packages are cryptographically signed with the "release.asc" key. Add our release key to your system's list of trusted keys to avoid a security warning.

#wget -q -O- https://raw.github.com/ceph/ceph/master/keys/release.asc | sudo apt-key add -

Add release package

Add the Ceph packages to your repository. Replace {ceph-stable-release} with a stable Ceph release (e.g., cuttlefish, dumpling, emperor, firefly, etc.), such as:

#echo deb http://ceph.com/debian-{ceph-stable-release}/ $(lsb_release -sc) main | sudo tee /etc/apt/sources.list.d/ceph.list

eg:

#echo deb http://ceph.com/debian-dumpling/ $(lsb_release -sc) main | sudo tee /etc/apt/sources.list.d/ceph.list


Update your repository and install ceph-deploy:

sudo apt-get update && sudo apt-get install ceph-deploy


Install Ceph specific version on specific host

The command looks like this:

#ceph-deploy -v install --stable {ceph-stable-release} {hostname}

eg:

#sudo ceph-deploy -v install --stable dumpling ceph-vm12

Setup

Create Cluster:

Purpose: build a cluster with just 1 MON and 3 OSDs on a single node, so that node runs both the OSDs and the MON.

A cluster in Ceph groups OSDs and MONs together, and it relies on ceph.conf for its configuration. So first of all, you need to create ceph.conf via ceph-deploy, such as ceph-deploy -v new {hostname}

eg:

#sudo ceph-deploy -v new ceph-vm12

PS:

If you get an error saying "unable to resolve host", check:

That the /etc/hostname file contains just the name of the machine.

That /etc/hosts has an entry for localhost. It should have something like:

127.0.0.1 localhost.localdomain localhost

127.0.1.1 my-machine

10.0.2.15 ceph-vm12

Then run cat ceph.conf to check ceph.conf.

Here is a sample:

-------------------

[osd]

osd journal size = 1000

filestore xattr use omap = true

# Execute $ hostname to retrieve the name of your host,

# and replace {hostname} with the name of your host.

# For the monitor, replace {ip-address} with the IP

# address of your host.

[mon.a]

host = ceph-vm12

mon addr = 10.0.2.15:6789

[osd.0]

host = ceph-vm12

[osd.1]

host = ceph-vm12

[mds.a]

host = ceph-vm12

-------------------
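If you want to double check which daemon is configured on which host, a quick sketch with Python's standard configparser can read a file shaped like the sample above. This is only an illustration: the embedded text is a shortened copy of the sample with the placeholders already filled in, the function name is my own, and a real ceph.conf may use syntax that configparser does not understand.

```python
import configparser

# Shortened copy of the sample ceph.conf, with placeholders filled in.
SAMPLE_CONF = """
[osd]
osd journal size = 1000
filestore xattr use omap = true

[mon.a]
host = ceph-vm12
mon addr = 10.0.2.15:6789

[osd.0]
host = ceph-vm12
"""


def daemon_hosts(conf_text):
    """Map each daemon section (mon.a, osd.0, ...) to its configured host."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    return {
        section: parser[section]["host"]
        for section in parser.sections()
        if parser.has_option(section, "host")
    }


print(daemon_hosts(SAMPLE_CONF))
```

The [osd] section holds settings shared by all OSDs and has no host entry, so it is skipped.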

Deploying MON (Monitors)

ceph.mon.keyring

Now we need to create the Ceph monitor keyring (ceph.mon.keyring) and deploy the monitor:

#ceph-deploy -v mon create ceph-vm12

Check whether the monitor is in quorum:

#sudo ceph -s or sudo ceph health

As a result we can see the MON is running, but the cluster reports an error since we haven't set up any OSDs yet.

PS: In Ceph, quorum means that a majority of the monitors are up and agree on the state of the cluster; with a single MON, that one monitor forms the quorum by itself.

Deploying OSD - get the bootstrap keys generated by the monitors.

Before deploying OSDs, we need to get the bootstrap keys generated by the monitors.

- #ceph-deploy -v gatherkeys ceph-vm12

We can look at which disks are present in a machine, and how they are partitioned, with

- #ceph-deploy disk list ceph-vm12

ceph@ceph-vm12:~$ ceph-deploy disk list ceph-vm12

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.21): /usr/bin/ceph-deploy disk list ceph-vm12

[ceph-vm12][DEBUG ] connection detected need for sudo

[ceph-vm12][DEBUG ] connected to host: ceph-vm12

[ceph-vm12][DEBUG ] detect platform information from remote host

[ceph-vm12][DEBUG ] detect machine type

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 12.04 precise

[ceph_deploy.osd][DEBUG ] Listing disks on ceph-vm12...

[ceph-vm12][DEBUG ] find the location of an executable

[ceph-vm12][INFO ] Running command: sudo /usr/sbin/ceph-disk list

[ceph-vm12][DEBUG ] /dev/sda :

[ceph-vm12][DEBUG ] /dev/sda1 other, ext4, mounted on /

[ceph-vm12][DEBUG ] /dev/sda2 other

[ceph-vm12][DEBUG ] /dev/sda5 swap, swap

[ceph-vm12][DEBUG ] /dev/sdb other, unknown

[ceph-vm12][DEBUG ] /dev/sdc other, unknown

[ceph-vm12][DEBUG ] /dev/sdd other, unknown

[ceph-vm12][DEBUG ] /dev/sr0 other, iso9660, mounted on /mnt/cdrom

***deploy 3 OSD on 1 machine (ceph-vm12)

#ceph-deploy -v osd create ceph-vm12:sdb ceph-vm12:sdc ceph-vm12:sdd

THIS IS OUR CASE

***deploy 3 OSDs on 3 machines, using the same disk name on each (ceph-vm12-1, ceph-vm12-2, ceph-vm12-3)

#ceph-deploy -v osd create ceph-vm12-1:sdb ceph-vm12-2:sdb ceph-vm12-3:sdb

***deploy 1 OSD on each of 3 machines, using a different disk name on each (ceph-vm12-1, ceph-vm12-2, ceph-vm12-3)

#ceph-deploy -v osd create ceph-vm12-1:sdb ceph-vm12-2:sdc ceph-vm12-3:sdd

Try Deploy 3 OSDs

- #sudo ceph-deploy -v osd create ceph-vm12:sdb ceph-vm12:sdc ceph-vm12:sdd

- #sudo ceph -s

- list the disks again and you can see the 3 OSDs there.

ceph@ceph-vm12:~$ ceph-deploy disk list ceph-vm12

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.21): /usr/bin/ceph-deploy disk list ceph-vm12

[ceph-vm12][DEBUG ] connection detected need for sudo

[ceph-vm12][DEBUG ] connected to host: ceph-vm12

[ceph-vm12][DEBUG ] detect platform information from remote host

[ceph-vm12][DEBUG ] detect machine type

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 12.04 precise

[ceph_deploy.osd][DEBUG ] Listing disks on ceph-vm12...

[ceph-vm12][DEBUG ] find the location of an executable

[ceph-vm12][INFO ] Running command: sudo /usr/sbin/ceph-disk list

[ceph-vm12][DEBUG ] /dev/sda :

[ceph-vm12][DEBUG ] /dev/sda1 other, ext4, mounted on /

[ceph-vm12][DEBUG ] /dev/sda2 other

[ceph-vm12][DEBUG ] /dev/sda5 swap, swap

[ceph-vm12][DEBUG ] /dev/sdb :

[ceph-vm12][DEBUG ] /dev/sdb1 ceph data, active, cluster ceph, osd.0, journal /dev/sdb2

[ceph-vm12][DEBUG ] /dev/sdb2 ceph journal, for /dev/sdb1

[ceph-vm12][DEBUG ] /dev/sdc :

[ceph-vm12][DEBUG ] /dev/sdc1 ceph data, active, cluster ceph, osd.1, journal /dev/sdc2

[ceph-vm12][DEBUG ] /dev/sdc2 ceph journal, for /dev/sdc1

[ceph-vm12][DEBUG ] /dev/sdd :

[ceph-vm12][DEBUG ] /dev/sdd1 ceph data, active, cluster ceph, osd.2, journal /dev/sdd2

[ceph-vm12][DEBUG ] /dev/sdd2 ceph journal, for /dev/sdd1

[ceph-vm12][DEBUG ] /dev/sr0 other, iso9660, mounted on /mnt/cdrom

Update Default CRUSH Map

By default, "each copy is to be located on a different host". As I mentioned in the beginning section, an OSD is ...

- OSD -> FS(btrfs, xfs, ext4) -> Disk

- One Per Disk

All three of our OSDs sit on one host, so the default rule cannot be satisfied. We replace the CRUSH map with osdonly.txt instead.

Download osdonly.txt from (https://drive.google.com/folderview?id=0ByCF430gPoVcZVR3VEpaVEtKMnc&usp=sharing)

Put it in the "share" folder, then use the copy command below.

- #cp /media/sf_Share/osdonly.txt ./ -f

Or you can download it directly from my Dropbox share to your working directory.

- #wget https://dl.dropboxusercontent.com/u/44260569/osdonly.txt

- #crushtool -c $HOME/ceph-deploy/osdonly.txt -o ./cm_new

- #sudo ceph osd setcrushmap -i ./cm_new

...wait 30 seconds...

- #sudo ceph -s

You will see the health change from HEALTH_WARN to HEALTH_OK.

Before:

ceph@ceph-vm12:~$ sudo ceph -s

cluster 1fb9e866-727c-4ffe-a502-eebc712592b4

health HEALTH_WARN 192 pgs degraded; 192 pgs stuck unclean

monmap e1: 1 mons at {ceph-vm12=10.0.2.15:6789/0}, election epoch 2, quorum 0 ceph-vm12

osdmap e16: 3 osds: 3 up, 3 in

pgmap v28: 192 pgs: 192 active+degraded; 0 bytes data, 100 MB used, 15226 MB / 15326 MB avail

mdsmap e1: 0/0/1 up

After:

ceph@ceph-vm12:~$ sudo ceph -s

cluster 1fb9e866-727c-4ffe-a502-eebc712592b4

health HEALTH_OK

monmap e1: 1 mons at {ceph-vm12=10.0.2.15:6789/0}, election epoch 2, quorum 0 ceph-vm12

osdmap e16: 3 osds: 3 up, 3 in

pgmap v30: 192 pgs: 192 active+clean; 0 bytes data, 102 MB used, 15224 MB / 15326 MB avail

mdsmap e1: 0/0/1 up

In sum, at this point, the Ceph cluster is

- In good health

- The MON election epoch is 2

- The quorum contains monitor rank 0 (ceph-vm12). Usually there are several ranks (0, 1, 2, etc.); we have only one because we are using a single host.

- The OSD map epoch is 16 (osdmap e16: 3 osds: 3 up, 3 in)

- We have 3 OSDs ( 3 up and 3 in )

- They are all up and in.
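The same summary can be pulled out of the status text with a few regular expressions. This is a rough sketch that only understands the output format shown in this post (other Ceph releases print the status differently), and the function and key names are my own.

```python
import re

# Status text copied from the "After" output above.
SAMPLE_STATUS = """\
cluster 1fb9e866-727c-4ffe-a502-eebc712592b4
health HEALTH_OK
monmap e1: 1 mons at {ceph-vm12=10.0.2.15:6789/0}, election epoch 2, quorum 0 ceph-vm12
osdmap e16: 3 osds: 3 up, 3 in
pgmap v30: 192 pgs: 192 active+clean; 0 bytes data, 102 MB used, 15224 MB / 15326 MB avail
"""

OSDMAP_RE = re.compile(r"osdmap e(\d+): (\d+) osds: (\d+) up, (\d+) in")
ELECTION_RE = re.compile(r"election epoch (\d+), quorum ([\d,]+)")


def summarize_status(status_text):
    """Extract health, monitor quorum, and OSD counts from `ceph -s` text."""
    summary = {}
    for raw_line in status_text.splitlines():
        line = raw_line.strip()
        if line.startswith("health "):
            summary["health"] = line.split()[1]
        osd = OSDMAP_RE.search(line)
        if osd:
            epoch, total, up, in_ = (int(g) for g in osd.groups())
            summary.update(osdmap_epoch=epoch, osds=total, osds_up=up, osds_in=in_)
        mon = ELECTION_RE.search(line)
        if mon:
            summary["election_epoch"] = int(mon.group(1))
            summary["quorum_ranks"] = [int(r) for r in mon.group(2).split(",")]
    return summary


print(summarize_status(SAMPLE_STATUS))
```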

Maintenance Examples

A Ceph node runs a certain number of daemons that you can control as follows.

Ceph MON ( monitor daemon )

- #sudo [stop|start|restart] ceph-mon-all

or check eg: ps -ef | grep ceph-mon

- #sudo [stop|start|restart] ceph-mon id=<hostname>

Ceph OSDs ( Object Storage Daemon )

- #sudo [stop|start|restart] ceph-osd-all

or check eg: ps -ef | grep ceph-osd

- #sudo [stop|start|restart] ceph-osd id=<n> eg: sudo stop ceph-osd id=0

Double Check Overall Health

- #sudo ceph -s

Double check OSD Tree

- #sudo ceph osd tree

All Ceph Daemon

- #sudo [stop|start|restart] ceph-all

Checking the installed Ceph version on a host

- #sudo ceph -v

Time Skew Note

***Time is very important for a Ceph cluster***

The Ceph cluster may report a clock skew on some of the monitors. To fix this specific problem, follow the steps below.

SSH to each MON (host) to check the time

local

#date

remote

#ssh ceph@ceph-vm12 date

stop ntp service

#sudo service ntp stop

sync with NTP

#sudo ntpdate time.apple.com ( or other public server )

after checking the NTP time, restart the MON

#sudo restart ceph-mon-all

Recommendation:

Update the /etc/ceph/ceph.conf file on each of your VMs to allow a greater time skew between monitors. By default, the allowed time skew is 0.05 seconds.

On the MON's /etc/ceph/ceph.conf:

[mon]

mon_clock_drift_allowed = 1

mon_clock_drift_warn_backoff = 30

PS: Extra NTP information can be found at https://help.ubuntu.com/14.04/serverguide/NTP.html. In my demo I'm using only one MON, so I won't have any clock drift or skew issues.
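To picture what the allowed drift means, here is a toy check. It is only a sketch of the idea, not how the monitors actually measure skew: the clock readings are made-up numbers, the function name is my own, and I assume every monitor is compared against one reference monitor.

```python
def skewed_monitors(clocks, reference, allowed_drift=0.05):
    """Return the monitors whose clock is further than allowed_drift
    seconds away from the reference monitor's clock."""
    base = clocks[reference]
    return sorted(
        name for name, reading in clocks.items()
        if abs(reading - base) > allowed_drift
    )


# Hypothetical clock readings in seconds, taken at the same moment.
clocks = {"mon.a": 100.00, "mon.b": 100.02, "mon.c": 100.30}

print(skewed_monitors(clocks, "mon.a"))       # default 0.05 s
print(skewed_monitors(clocks, "mon.a", 1.0))  # relaxed to 1 s
```

With the default 0.05 seconds, mon.c (0.30 s off) is reported; after relaxing the limit to 1 second, nothing is reported.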

Using RADOS

Create a 10 MB file at /tmp/test via dd

- #sudo dd if=/dev/zero of=/tmp/test bs=10M count=1

- output

- 1+0 records in

- 1+0 records out

- 10485760 bytes (10MB) copied

RADOS put

- #sudo rados -p data put test /tmp/test

RADOS display diff

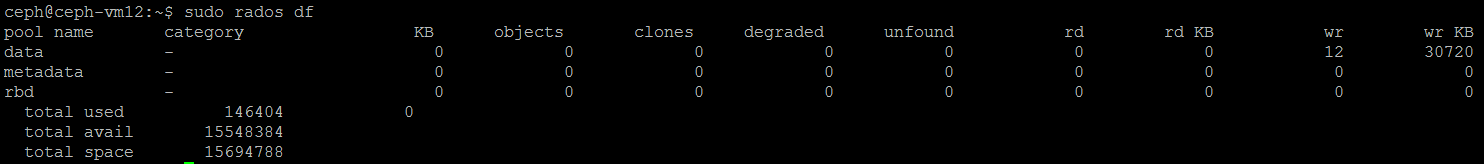

- #sudo rados df

Demo: add 3 10MB files, call test, test1 and test 2 and remove it to see what's happen from rados df

- #sudo rados -p data put test /tmp/test

- #sudo rados df

- #sudo rados -p data put test1 /tmp/test

- #sudo rados df

- #sudo rados -p data put test2 /tmp/test

- #sudo rados df

Clean up

CephX - Ceph's "internal authentication mechanism".

Ceph daemons use CephX to authenticate before talking to each other. Clients must also identify themselves before they can use Ceph.

The examples here assume that the keyring for the client.admin user is in /etc/ceph/ceph.client.admin.keyring.

To use Ceph's watch mode with CephX enabled:

- #sudo ceph --id admin --keyring /etc/ceph/ceph.client.admin.keyring -w

To enable an RBD image with CephX enabled:

- #sudo rbd --id admin --keyring /etc/ceph/ceph.client.admin.keyring -w

Or create an RBD image named test with size 1024, with CephX enabled:

- #sudo rbd --id admin --keyring /etc/ceph/ceph.client.admin.keyring create --size 1024 test

Defining which cephx key to use

By default, every ceph command will try to authenticate with the admin client. To avoid the need to specify a client and its associated keyring file in the CLI, you can use the CEPH_ARGS variable.

eg:

- CEPH_ARGS='--id rbd.alice --keyring /etc/ceph/ceph.client.rbd.alice.keyring'

add a user to Ceph

- #sudo ceph --id admin --keyring /etc/ceph/ceph.client.admin.keyring auth get-or-create client.test mds 'allow' osd 'allow *' mon 'allow *' -o /etc/ceph/keyring.test

Editing Permission

Check the current permissions

- #sudo ceph auth list

You can see the client.test user we just created, with the permissions we just assigned.

If you would like to change the permissions, you can either update them via the CLI above or add a caps line directly in the keyring file ( /etc/ceph/keyring.test ).

Working with RADOS pools -- picture a pool as similar to a Swift "container" (a folder)

Getting information on pools

list currently existing pools

- #sudo rados lspools

list of all available pools along with their internal number

- #sudo ceph osd lspools

list all pools and get extra information (replication size, crush ruleset, etc.),

- #sudo ceph osd dump | grep pool

- #sudo rados df

or

- #sudo ceph df

Creating and Deleting pools

Before we start here, I have to introduce placement groups.

How are placement groups used? The concept is similar to a journaling file system (tracking changes).

A placement group (PG) aggregates objects within a pool because tracking "object placement" and "object metadata" on a per-object basis is computationally expensive. eg: a system with millions of objects cannot realistically track placement on a per-object basis.

PGs are invisible to the Ceph user: the CRUSH algorithm determines in which placement group an object will be placed. Although CRUSH is a deterministic function that takes the object name as a parameter, there is no way to force an object into a given placement group.

eg: if OSD #2 fails, another OSD will be assigned to PG #1. If the pool size is changed from 2 to 3, an additional OSD will be assigned to the PG and will receive copies of all objects in the PG.

A PG doesn't own its OSDs; it shares them with other PGs from the same pool. If OSD #2 fails, PG #2 will also have to restore copies of its objects, using OSD #3.

PS: When the number of PGs increases, the new PGs are assigned to OSDs. The CRUSH result also changes, and some objects from the former PGs are copied over to the new PGs and removed from the old ones.

create a pool - with 128 configured placement groups

- #sudo ceph osd pool create swimmingpool 128

rename a pool

- #sudo ceph osd pool rename swimmingpool whirlpool

***change size to 3 (number of replicas = 3)***

- #sudo ceph osd pool set whirlpool size 3

PS: REF info for choosing pg_num

- Less than 5 OSDs set pg_num to 128

- Between 5 and 10 OSDs set pg_num to 512

- Between 10 and 50 OSDs set pg_num to 4096

- If you have more than 50 OSDs, you need to understand the tradeoffs and how to calculate the pg_num value by yourself

- eg, for a cluster with 200 OSDs and a pool size of 3 replicas, you would estimate your number of PGs as follows:

(200 * 100) / 3 = 6667. Nearest power of 2: 8192
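The estimate above can be written as a tiny helper. This is just a sketch of the arithmetic: the function name is my own, the 100 comes from the example, and I assume "nearest power of 2" means rounding up to the next power of two, which matches the 6667 -> 8192 result.

```python
def suggested_pg_count(num_osds, replicas, pgs_per_osd=100):
    """(OSDs * 100) / replicas, rounded up to the next power of two."""
    raw = (num_osds * pgs_per_osd) / replicas
    power = 1
    while power < raw:
        power *= 2
    return power


# The example above: 200 OSDs, pool size 3.
print(suggested_pg_count(200, 3))
```

For 200 OSDs and 3 replicas the raw value is about 6667, and the next power of two is 8192.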

Display human-readable pg map via dump.

- #sudo ceph pg dump

- #sudo ceph pg map 4.75

osdmap e24 pg 4.75 (4.75) -> up [2,0] acting [2,0]

Use the command as below to locate where are the above OSDs running.

- #sudo ceph osd tree

create a file via dd

- #sudo dd if=/dev/zero of=/tmp/test bs=10M count=1

upload /tmp/test to whirlpool

- #sudo rados -p whirlpool put test /tmp/test

request stats

- #sudo rados -p whirlpool stat test

or display detail statistic

- #sudo rados df

delete the pool

- #sudo ceph osd pool delete whirlpool whirlpool --yes-i-really-really-mean-it

display detail statistic

- #sudo rados df

Working with RADOS Block Devices

Build three nodes, with all cluster nodes running OSDs and MONs, and use this Ceph cluster as the target for RBD (RADOS Block Device) access.

RADOS Block Device (RBD) ( create key for image --> create image --> map image with key to local --> create file system and mount )

Create Client Credential for the RBD Client under /etc/ceph/

- #sudo ceph auth get-or-create client.rbd.johnny osd 'allow rwx pool=rbd' mon 'allow r' -o /etc/ceph/ceph.client.rbd.johnny.keyring

Create RBD image - name: test

- #sudo rbd create test --size 128

Check the RBD image - name: test

- #sudo rbd info test

- #sudo modprobe rbd

Map image on local server

- #sudo rbd --id rbd.johnny --keyring=/etc/ceph/ceph.client.rbd.johnny.keyring map test

List all mapped RBD image

- #sudo rbd --id rbd.johnny --keyring=/etc/ceph/ceph.client.rbd.johnny.keyring showmapped

or if you are in johnny

- #sudo rbd --id rbd.johnny showmapped

- eg: device rbd0 as pool - name "rbd", similar with object example: swimmingpool, whirlpool

id pool image snap device

0 rbd test - /dev/rbd0

or

1 rbd test - /dev/rbd1

Just check which rbd device was assigned.


Formatting the rbd drive

- #sudo mkfs.ext4 /dev/rbd0

Create a folder for mount the rbd drive

- #sudo mkdir /mnt/rbd

Mount rbd drive

- #sudo mount /dev/rbd0 /mnt/rbd

Check mount File System

- #df or df -h

eg:

/dev/rbd0 124M 5.6M 113M 5% /mnt/rbd

Storing data in Ceph rbd

Create Test data with dd command

- #sudo dd if=/dev/zero of=/tmp/test bs=10M count=1

check before copy

- #df -h

eg:

/dev/rbd0 124M 5.6M 113M 5% /mnt/rbd

copy

- #sudo cp /tmp/test /mnt/rbd/test_cp

check after copy

- #df -h

eg:

/dev/rbd0 124M 16M 102M 14% /mnt/rbd

display detail statistic

- #sudo rados df

observe:

add 10MB, objects + 2

add 20MB, objects + 3

add 30MB, objects + 4

Use RADOS

upload as object - put

- #sudo rados --id rbd.johnny --keyring=/etc/ceph/ceph.client.rbd.johnny.keyring -p rbd put test /tmp/test

try

- #sudo rados --id rbd.johnny -p rbd put test /tmp/test

- #sudo rados --id rbd.johnny -p rbd put test1 /tmp/test

Request the stats

- #sudo rados --id rbd.johnny -p rbd --keyring=/etc/ceph/ceph.client.rbd.johnny.keyring stat test

or try

- #sudo rados --id rbd.johnny -p rbd stat test

Check Cephx in action

- #sudo rados --id rbd.johnny -p data --keyring=/etc/ceph/ceph.client.rbd.johnny.keyring put test /tmp/test

- error putting data/test: Operation not permitted

- because the key we created only grants access to the rbd pool (osd 'allow rwx pool=rbd'), a put into the data pool is rejected, while the same put into the rbd pool works.

Clean up ( umount /dev/rbd0 --> unmap --> remove data from the default RADOS pool )

unmount

- #cd $HOME

- #sudo umount /mnt/rbd

unmap

- #sudo rbd --id rbd.johnny --keyring=/etc/ceph/ceph.client.rbd.johnny.keyring unmap /dev/rbd0

or

- #sudo rbd --id rbd.johnny unmap /dev/rbd0 or rbd1 ... etc

- #sudo rados --id rbd.johnny -p rbd rm test

- #sudo rados --id rbd.johnny -p rbd rm test1

- #sudo rados --id rbd.johnny -p rbd rm test2

- ...

final double check

- #sudo rados --id rbd.johnny df

Working with the CRUSH map

We experienced this in the first OSD setup, when we updated the CRUSH map via osdonly.txt. Now let's dig deeper to see how CRUSH makes the magic happen.

CRUSH runs a quick calculation and assigns the object location directly, without a lookup.

CRUSH is a pseudo-random placement algorithm; it finds the object "on the fly without metadata indexing". It is "repeatable" and "deterministic".

CRUSH includes rule-based configuration such as infrastructure topology awareness (region, site ... etc), adjustable replication (change the replica policy), and weighting (prioritize based on weights).

Working without an index is a great idea to me, and if you are interested in the CRUSH logic, here is the CRUSH map documentation: (http://docs.ceph.com/docs/master/rados/operations/crush-map/)

Create a customized CRUSH (Controlled Replication Under Scalable Hashing) map that contains SSD disks.

Strategy: every primary copy is written to SSD and every replica is written to platter.

Idea:

- Copy --> Primary Copy --> SSD

- Primary Copy --> Replica --> Platter

Get current CRUSH map

- #sudo ceph osd getcrushmap -o /tmp/cm

- Output:got crush map from osdmap epoch 27

- check CRUSH map - it's binary file

- #ls /tmp/cm

- or

- #cat /tmp/cm (it's a binary file)

decode CRUSH map binary file

- #crushtool -d /tmp/cm -o /tmp/cm.txt

- check the decode result; a return code of 0 means it was successful.

- #echo $?

- check decode CRUSH map file

- #cat /tmp/cm.txt

- #sudo ceph osd tree

Testing a rule

When you create a configuration file and deploy Ceph with ceph-deploy, Ceph generates a default CRUSH map for your configuration. The default CRUSH map is fine for your Ceph sandbox environment. However, when you deploy a large-scale data cluster, you should give significant consideration to developing a custom CRUSH map, because it will help you manage your Ceph cluster, improve performance and ensure data safety.

Compile the CRUSH map

- #crushtool -c /tmp/cm.txt -o /tmp/cm.new

- check compile result via return code 0

- #echo $?

crushtool allows you to test a CRUSH ruleset

- #crushtool --test -i /tmp/cm.new --num-rep 3 --rule 2 --show-statistics

- output: eg: CRUSH rule 2 x 1023 [0,2,1] ,

- [x,y,z] - x is primary OSD for the copy, y is secondary OSD for second copy and z is the third OSD
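When the test prints many mapping lines, it can help to split each one into primary and replicas. A small sketch, assuming every mapping line looks exactly like the example above ("CRUSH rule 2 x 1023 [0,2,1]"); the function and key names are my own.

```python
import re

MAPPING_RE = re.compile(r"CRUSH rule (\d+) x (\d+) \[([\d,]*)\]")


def parse_mapping(line):
    """Split one mapping line into rule, input value, primary OSD and replicas.

    Returns None when the line is not a mapping line.
    """
    match = MAPPING_RE.search(line)
    if match is None:
        return None
    rule, x, osds = match.groups()
    osd_ids = [int(osd) for osd in osds.split(",") if osd]
    return {
        "rule": int(rule),
        "x": int(x),
        "primary": osd_ids[0] if osd_ids else None,
        "replicas": osd_ids[1:],
    }


print(parse_mapping("CRUSH rule 2 x 1023 [0,2,1]"))
```

For the example line, OSD 0 is the primary and OSDs 2 and 1 hold the second and third copies.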

Applying

Apply a crushmap

- #sudo ceph osd setcrushmap -i /tmp/cm.new

identify all OSDs

- #sudo ceph osd tree

Demo: Try SSD first rule to the pool

create a SSD pool

- #sudo ceph osd pool create mypool 128

set SSD pool crush rule set

- #sudo ceph osd pool set mypool crush_ruleset 2

- PS: note the underscore in crush_ruleset.

Double Check Rule works or not

- #sudo ceph osd map mypool test

- output: --> up [x,y] acting [x,y]

- or

- #sudo ceph osd map mypool alpha

- output: --> up [y,z] acting [y,z]

PS: The objects test and alpha do not need to be present in the pool for the command to work because Ceph calculates the position of objects based on the "object name’s hash".

PS:Underlined are the IDs of the Placement Group the object will be assigned to if it were to be created with the exact same NAME you passed in as an argument to the tool.

eg: try test, test1, test2, alpha, beta, gamma, delta ... etc,

as long as the name is same the test result should be the same.
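The "same name, same result" behaviour comes from hashing the object name. Here is a simplified sketch of that idea; it is an assumption-laden stand-in, since Ceph uses its own hash function and its own way of folding the hash into the PG count, so the PG ids printed here will not match a real cluster. The function name is my own, and the pool id 4 is only borrowed from the "pg 4.75" example earlier.

```python
import zlib


def pg_for_object(pool_id, object_name, pg_num):
    """Toy mapping: hash the object name and fold it into pg_num groups.

    Returns an id shaped like the ones Ceph prints: <pool>.<pg in hex>.
    """
    name_hash = zlib.crc32(object_name.encode("utf-8"))
    return f"{pool_id}.{name_hash % pg_num:x}"


for name in ("test", "test1", "test2", "alpha", "beta"):
    print(name, pg_for_object(4, name, 128))
```

No object has to exist for this to work: the name alone decides the placement group, and running it again prints the same ids.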


CephFS

Configure MDS and mount the Ceph file system, including snapshots.

Full CephFS mount. I configure the MDS (metadata server) mostly via ceph-deploy. But I have to mention that, at the time of posting this article, CephFS hasn't been formally announced as production ready.

- #cd $HOME/ceph-deploy

- #ceph-deploy mds create {hostname} eg: ceph-deploy mds create ceph-vm12

Display the content of the ceph admin keyring

- #cat ceph.client.admin.keyring

Copy the keyring file content; we will modify it to fit the CephFS mount.

- #cat ceph.client.admin.keyring > $HOME/asecret

modify

go to home dir

- #cd $HOME

edit the file and leave only the key

- #vim asecret

- keep only eg: AQBpzclUMLIVIRAAKMjxzDYw5WZorOJDrrHcHQ==

make the ceph user the owner

- #sudo chown ceph $HOME/asecret

set mode 0644

- #sudo chmod 0644 $HOME/asecret

Create a mount point and mount CephFS

go to home dir

- #cd $HOME

create directory under /mnt call cephfs as mount point

- #sudo mkdir /mnt/cephfs

mount with keyring cert

- #sudo mount -t ceph -o name=admin,secretfile=./asecret johnny:/ /mnt/cephfs

same

- #sudo mount -t ceph -o name=admin,secretfile=./asecret {hostname}:/ /mnt/cephfs

display

- #df

or

- #df -h

- #cd /mnt/cephfs

- #sudo mkdir dir1

- #sudo mkdir dir2

- #ls -al

- #sudo chown ceph ./dir1

- #sudo chown ceph ./dir2

- #touch dir1/atestfile.txt

- PS:The touch command is the easiest way to create new, empty files.

- It is also used to change the timestamps (i.e., dates and times of the most recent access and modification) on existing files and directories.

- #ls -al

In depth CephFS mount

Create a new mount point( umount previous and mount to subfolder dir1 )

- #cd $HOME

- #sudo umount /mnt/cephfs/

- #sudo mount -t ceph -o name=admin,secretfile=./asecret johnny:/dir1 /mnt/cephfs

Check

- #df

- #cd /mnt/cephfs

- #ls -al

Working with CephFS Snapshots

create test file at existing directory via dd

- #dd if=/dev/zero of=./ddtest bs=1024 count=10

check

- #ls -al

or

- #ls -lrth

snapshot this directory

- #mkdir ./.snap/snap_now

display snapshot

- #ls ./.snap/snap_now

delete and restore

delete

- #rm ./ddtest

restore - cp from snapshot folder

- #cp ./.snap/snap_now/ddtest ./

unmount

- #cd $HOME

- #sudo umount /mnt/cephfs

RESTful APIs to Ceph using RADOSGW (RADOS Gateway)

After we properly install and configure RADOSGW, we can interact with Ceph through the following CLIs/APIs.

- Swift command

- curl command

- s3cmd command

Install RADOSGW and Apache

- #sudo apt-get install apache2 radosgw libapache2-mod-fastcgi

Configure RADOSGW

- #sudo vim /etc/ceph/ceph.conf

- add following for radosgw:

[client.radosgw.johnny]

host = johnny

rgw socket path = /tmp/radosgw.sock

keyring = /etc/ceph/keyring.radosgw.johnny

As you can see from what we added, we need to create keyring.radosgw.johnny

- #sudo ceph auth get-or-create client.radosgw.johnny osd 'allow rwx' mon 'allow rw' -o /etc/ceph/keyring.radosgw.johnny

create /etc/apache2/sites-available/radosgw.vhost for radosgw.vhost configuration file

- #sudo vim /etc/apache2/sites-available/radosgw.vhost

- add following

<IfModule mod_fastcgi.c>

FastCgiExternalServer /var/www/radosgw.fcgi -socket /tmp/radosgw.sock

</IfModule>


<VirtualHost *:80>

ServerName johnny (should be your server name)

ServerAlias johnny (should be your server name/alias)

ServerAdmin webmaster@example.com

DocumentRoot /var/www

<IfModule mod_rewrite.c>

RewriteEngine On

RewriteRule ^/(.*) /radosgw.fcgi?%{QUERY_STRING} [E=HTTP_AUTHORIZATION:%{HTTP:Authorization},L]

</IfModule>

<IfModule mod_fastcgi.c>

<Directory /var/www>

Options +ExecCGI

AllowOverride All

SetHandler fastcgi-script

Order allow,deny

Allow from all

AuthBasicAuthoritative Off

</Directory>

</IfModule>

AllowEncodedSlashes On

ServerSignature Off

</VirtualHost>

Add radosgw.fcgi script

- #sudo vim /var/www/radosgw.fcgi

- add following

#!/bin/sh

exec /usr/bin/radosgw -c /etc/ceph/ceph.conf -n client.radosgw.johnny

PS: the name passed to -n follows the pattern client.radosgw.{hostname}

make executable

- #sudo chmod +x /var/www/radosgw.fcgi
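If you prefer not to edit the wrapper by hand, the two steps (write the script, make it executable) can be sketched as one function. The name write_rgw_fcgi is hypothetical; the radosgw path and options are the ones used in this post.

```shell
# Hypothetical helper: write the FastCGI wrapper for the gateway on host $2
# into directory $1 and make it executable.
write_rgw_fcgi() {
    target="$1/radosgw.fcgi"
    {
        printf '#!/bin/sh\n'
        printf 'exec /usr/bin/radosgw -c /etc/ceph/ceph.conf -n client.radosgw.%s\n' "$2"
    } > "$target"
    chmod +x "$target"
}

# Usage (needs write access to the document root):
#   write_rgw_fcgi /var/www johnny
```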

Enable the apache site and restart apache:

- #sudo a2ensite radosgw.vhost

- #sudo a2dissite default

- #sudo a2enmod rewrite

- #sudo service apache2 restart

Create the required file for upstart to start the radosgw

- #sudo mkdir /var/lib/ceph/radosgw/ceph-radosgw.johnny

- #sudo touch /var/lib/ceph/radosgw/ceph-radosgw.johnny/done

Start services:

- #sudo start radosgw-all-starter

Create the default region map

- #sudo radosgw-admin regionmap update

Create and add a pool to the radosgw if it does not exist yet:

- #sudo ceph osd pool create .rgw.buckets 128

- #sudo radosgw-admin pool add --pool=.rgw.buckets

Update the default region map

- #sudo radosgw-admin regionmap update
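The 128 in the pool create command is the number of placement groups. A rule of thumb that I remember from the Ceph documentation is (number of OSDs x 100) / replica count, rounded up to the next power of two. The sketch below only does that arithmetic; the function name suggest_pg_num and the example counts (3 OSDs, 3 replicas) are my assumptions, and this post does not state how 128 was actually chosen.

```shell
# Rule-of-thumb sketch: (OSDs * 100) / replicas, rounded up to a power of two.
# $1 = number of OSDs, $2 = replica count (pool size)
suggest_pg_num() {
    target=$(( ($1 * 100 + $2 - 1) / $2 ))   # ceiling division
    pg=1
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

suggest_pg_num 3 3    # prints 128
```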

Add User

1. create admin user

2. use admin user to create sub user.

add user to the radosgw

- #sudo radosgw-admin -n client.radosgw.johnny user create --uid=johnnywang --display-name="Johnny Wang" --email=johnny@example.com --access-key=12345 --secret=67890

- output

{ "user_id": "johnnywang",

"display_name": "Johnny Wang",

"email": "johnny@example.com",

"suspended": 0,

"max_buckets": 1000,

"auid": 0,

"subusers": [],

"keys": [

{ "user": "johnnywang",

"access_key": "12345",

"secret_key": "67890"}],

"swift_keys": [],

"caps": [],

"op_mask": "read, write, delete",

"default_placement": "",

"placement_tags": []}

It's ready to access radosgw via the S3 API
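The access key and secret key in that output are what the S3 tools need later, so it can be handy to pull them out in a script. Below is a minimal sketch using sed; json_string_field is a hypothetical name, and it assumes one "key": "value" pair per line as in the output above. A real JSON parser would be more robust.

```shell
# Hypothetical helper: print the first string value stored under key $1.
# Reads radosgw-admin style JSON on stdin.
json_string_field() {
    sed -n 's/.*"'"$1"'"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Usage:
#   sudo radosgw-admin user info --uid=johnnywang | json_string_field access_key
```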

Add sub user for johnnywang

- #sudo radosgw-admin subuser create --uid=johnnywang --subuser=johnnywang:swift --access=full

Then, create a key for the swift sub-user:

- #sudo radosgw-admin key create --subuser=johnnywang:swift --key-type=swift --secret=secretkey

Access RADOSGW with the OpenStack Swift Command (swift)

Test with swift command ( python-swiftclient package )

- #sudo apt-get install swift

- #swift -V 1.0 -A http://johnny/auth -U johnnywang:swift -K secretkey post test

- #echo $?

0 - ok!

Here is an example:

This is just a demo of connecting to Ceph through the Swift API; however, the Ceph web site said that it was still not recommended for production use.

Test by listing the containers; the new container should appear

- #swift -V 1.0 -A http://{hostname}/auth -U johnnywang:swift -K secretkey list

output: test

The secret value to be used is the value previously shown on your screen when creating the key for the sub-user
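Typing the auth URL, user and key on every swift call gets tedious, so the two checks above (create a container, then list it) can be sketched as a small wrapper. The variable names RGW_HOST, SWIFT_USER and SWIFT_KEY and both function names are my own; the defaults are just the example values from this post.

```shell
# Hypothetical wrapper so the endpoint and credentials are typed only once.
RGW_HOST="${RGW_HOST:-johnny}"
SWIFT_USER="${SWIFT_USER:-johnnywang:swift}"
SWIFT_KEY="${SWIFT_KEY:-secretkey}"

rgw_swift() {
    swift -V 1.0 -A "http://$RGW_HOST/auth" -U "$SWIFT_USER" -K "$SWIFT_KEY" "$@"
}

# Create container $1, then confirm that it shows up in the listing.
rgw_swift_create_and_check() {
    rgw_swift post "$1" || return 1
    rgw_swift list | grep -qxF "$1"
}

# Usage:
#   rgw_swift_create_and_check test && echo "container is there"
```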

Access the RadosGW Admin API (curl)

Create a RadosGW admin

A radosgw admin will have special privileges to access users, buckets and usage information through the RadosGW Admin API.

- #sudo radosgw-admin user create --uid=admin --display-name="Admin user" --caps="users=read, write; usage=read, write; buckets=read, write; zone=read, write"

- #sudo radosgw-admin key create --uid=admin --access-key=abcde --secret=qwerty

Access RADOSGW API - curl

The admin API uses the same authentication scheme as the S3 API. To get access to data, you can use the S3curl CLI.

install libdigest-hmac-perl

install curl

- #sudo apt-get install libdigest-hmac-perl curl

print info for admin

- #sudo radosgw-admin user info --uid=admin

- output as below, it's the admin we created before.

create s3curl file

- #sudo vim ~/.s3curl

- add following

%awsSecretAccessKeys = (

admin => {

id => 'abcde',

key => 'qwerty',

},

);

Change permission

- #sudo chmod 400 ~/.s3curl

modify s3curl.pl script

- download s3curl zip from https://aws.amazon.com/code/128

- unzip and move folder s3-curl to share ( the attached share folder we create )

- copy s3curl.pl

- #sudo cp /media/sf_Share/s3-curl/s3curl.pl ./

- modify the s3curl.pl script so that ‘johnny’ is included in @endpoints (line number 30).

- #sudo vim ./s3curl.pl

- PS you can try

- #wget https://dl.dropboxusercontent.com/u/44260569/s3curl.pl

eg: begin customizing here

my @endpoints = ( 'johnny',

's3.amazonaws.com',

's3-us-west-1.amazonaws.com',

's3-us-west-2.amazonaws.com',

's3-us-gov-west-1.amazonaws.com',

's3-eu-west-1.amazonaws.com',

's3-ap-southeast-1.amazonaws.com',

's3-ap-northeast-1.amazonaws.com',

's3-sa-east-1.amazonaws.com', );

- #sudo chmod +x ./s3curl.pl

list all the buckets of a user

- #./s3curl.pl --id=admin -- 'http://johnny/admin/bucket?uid=johnnywang'
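For the curious, what s3curl.pl adds to the request is an S3-style signature: an HMAC-SHA1 over a "string to sign", base64 encoded (this is why libdigest-hmac-perl was installed). The sketch below reproduces that calculation with openssl. It is my own illustration, assuming the older AWS signature version 2 layout with empty Content-MD5 and Content-Type and a resource path without the ?uid= query; the function name s3_v2_signature is hypothetical.

```shell
# Sketch of an AWS signature version 2 calculation (HMAC-SHA1, then base64).
# $1 = secret key, $2 = HTTP verb, $3 = Date header value, $4 = resource path
s3_v2_signature() {
    # String to sign: verb, Content-MD5 (empty), Content-Type (empty), date, resource
    string_to_sign=$(printf '%s\n\n\n%s\n%s' "$2" "$3" "$4")
    printf '%s' "$string_to_sign" | openssl dgst -sha1 -hmac "$1" -binary | openssl base64
}

# Usage: the result goes into an "Authorization: AWS <access key>:<signature>" header.
#   date_hdr=$(LC_ALL=C date -u '+%a, %d %b %Y %H:%M:%S GMT')
#   sig=$(s3_v2_signature qwerty GET "$date_hdr" /admin/bucket)
#   curl -H "Date: $date_hdr" -H "Authorization: AWS abcde:$sig" 'http://johnny/admin/bucket?uid=johnnywang'
```

In practice s3curl.pl is still the easier route; the sketch is only meant to show that nothing magic happens inside it.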

Access RADOSGW with S3 CLI (s3cmd)

download new vhost file

- #wget https://dl.dropboxusercontent.com/u/44260569/vhost.apache.txt

update /etc/ceph/ceph.conf, add one line under [client.radosgw.{hostname}]

rgw dns name = "rgw.local.lan"

restart apache

- #sudo service apache2 restart

terminate existing RADOS gateway

- #sudo killall radosgw

install s3cmd

- #sudo apt-get install s3cmd

update /etc/hosts add line as below.

{ip} rgw.local.lan bucket1.rgw.local.lan bucket2.rgw.local.la
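The hosts entry suggests that each bucket is reached as {bucket}.rgw.local.lan, so every bucket you plan to use needs its own alias on that line (unless you have wildcard DNS). A minimal sketch to build the line; rgw_hosts_line is a hypothetical name and 192.0.2.10 is only a placeholder address.

```shell
# Hypothetical helper: print an /etc/hosts line that maps the gateway name and
# one <bucket>.<gateway name> alias per bucket to the same IP address.
# $1 = IP address, $2 = gateway DNS name, remaining arguments = bucket names
rgw_hosts_line() {
    ip="$1"; dns="$2"; shift 2
    line="$ip $dns"
    for bucket in "$@"; do
        line="$line $bucket.$dns"
    done
    echo "$line"
}

rgw_hosts_line 192.0.2.10 rgw.local.lan bucket1 bucket2
# prints: 192.0.2.10 rgw.local.lan bucket1.rgw.local.lan bucket2.rgw.local.lan
```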

download s3cmd config

- #wget https://dl.dropboxusercontent.com/u/44260569/s3cmd.cfg

- #cp s3cmd.cfg ~/.s3cfg

try s3 command as below.

- #s3cmd ls

- #s3cmd mb s3://bucket1

- #s3cmd ls

Create test file

- #sudo dd if=/dev/zero of=/tmp/10MB.bin bs=1024k count=10

put file to the S3 RADOS gateway via the s3cmd command

- #s3cmd put --acl-public /tmp/10MB.bin s3://bucket1/10MB.bin

download file from the S3 RADOS gateway via plain HTTP (wget)

- #wget -O /dev/null http://bucket1.rgw.local.lan/10MB.bin
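The wget above throws the data away, which proves the object is reachable but not that it came back intact. A rough sketch of an upload, download and byte-by-byte compare follows; the function name rgw_roundtrip_ok and the variable RGW_DNS are my own, and the default matches the "rgw dns name" used in this post.

```shell
# Hypothetical check: upload a file, download it over plain HTTP, compare bytes.
RGW_DNS="${RGW_DNS:-rgw.local.lan}"

# $1 = local file, $2 = bucket name
rgw_roundtrip_ok() {
    src="$1"; bucket="$2"; name=$(basename "$src")
    copy=$(mktemp) || return 1
    s3cmd put --acl-public "$src" "s3://$bucket/$name" >/dev/null &&
        wget -q -O "$copy" "http://$bucket.$RGW_DNS/$name" &&
        cmp -s "$src" "$copy"
    status=$?
    rm -f "$copy"
    return "$status"
}

# Usage:
#   rgw_roundtrip_ok /tmp/10MB.bin bucket1 && echo "round trip ok"
```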

---Related Quick Q&A---

3 Major Ceph and OpenStack Integrations

- Cinder Integration

- Glance Integration

- Keystone Integration

Describe 3 ways to integrate Ceph/RBD. How does Ceph integrate with OpenStack?

- Kernel RBD: rbd block device managed at client kernel (e.g. /dev/rbd)

- libvirt : integrates Ceph RBD to "libvirt" via "librbd" for virtual machine to access Ceph block storage

- Rados Gateway: provides RESTful API to access Ceph Object Storage Cluster via Rados protocol

OpenStack integrates with Ceph through:

- Swift: OpenStack clients access Ceph through the "Swift API"

- Cinder: for VMs to access block storage managed on Ceph, enabled by the "RBD driver for Cinder"; set the Ceph pool name in "nova.conf"

- Glance: for images to be stored as block storage on Ceph; configure the "RBD pool" in "glance-api.conf"

Describe how data moves from inside VM to Disk with OpenStack/KVM and Ceph setup

- VM -> client OS -> /dev/vdb -> "libvirt" -> KVM/QEMU -> "librbd" -> RADOS -> OSD -> XFS/EXT4 -> disk for journal and data.

What are the essential elements for designing a highly available storage cluster? Is Ceph HA, and why?

- Prevent single points of failure by having redundant modules at each layer that communicate their state to each other, so that services can fail over when a module fails.

- The logical elements involved in building an HA storage cluster are:

- "heartbeat", monitoring, failover and failback, and data replication.

- Ceph is HA because of the distributed design of its Monitors and OSD hosts, and because data is replicated across OSDs.
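One bit of arithmetic behind the "small, odd number" of monitors: consensus needs a majority of monitors to be up. The sketch below is just that majority calculation under my assumption of a plain majority quorum; the function names are hypothetical.

```shell
# Majority quorum size for a monitor cluster of size $1.
mon_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of monitors that can fail while a majority still remains.
mon_failures_tolerated() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
    echo "monitors=$n quorum=$(mon_quorum "$n") tolerated_failures=$(mon_failures_tolerated "$n")"
done
```

Going from 3 to 4 monitors does not let you lose more of them (still 1), which is why an even count buys nothing.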

Thought:

I am not sure whether this is a real issue or just a refresh issue, but I often saw the numbers (statistics) update with a delay. My guess is that the write to the primary copy happens right away, while some replicas may be handled later by a daemon or a cron scheduler.