Storage Location ( Physical )

Before talking about the ring in Swift, I would like to cover storage locations first, since Swift is software built on top of JBOD. A storage location is a physical disk inside an account, container, or object server, where account databases, container databases, and objects are stored.

Zone

A zone is an isolated set of storage locations. A "zone" could represent a single disk, a server, a cabinet, a switch, or even a physical part of the datacenter. A similar concept is SAN zoning, which does much the same thing. In Swift, though, a zone usually represents a server rack.

PS: In most cases Swift is designed with regions on top of zones, which means each region can have multiple zones.

PS: It might be easier to picture it this way:

zone = rack of servers/drives

region = "server room or data center"

However, this is just an example and may not always hold.

- Region > Zone

- Server Room or DC (Region) > Rack of Servers(Zone)

Sum

Storage Location: Region (DC) > Zone (Rack)

The Ring ( Virtual )

Last, we would like to discuss how Swift places data. It all relies on the "Ring".

The Ring determines the storage locations of any given "account database", "container database", or "object". It's a "static" data structure, and locations are deterministically derived.

Where should this object be stored --> The Ring --> Store this object in these locations --> disk 1 ... disk m ... disk n

Ring Partition

Concept: Ring > (1:N) > Ring Partition > (1:N) > "A set of Storage Locations" ~ "The Number of Desired Replicas"

- A ring is segmented into a number of ring partitions.

- Each ring partition maps to "a set of storage locations".

- The number of storage locations per ring partition is determined by "the number of desired replicas".

PS: On disk, a ring partition is a group of directories.
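The mapping above can be sketched as a toy data structure. This is only an illustration: `ToyRing` and its round-robin placement are hypothetical and are not Swift's actual ring format or placement logic, which also spreads replicas across regions and zones.

```python
from dataclasses import dataclass


@dataclass
class ToyRing:
    """Hypothetical, simplified ring: partition -> set of storage locations."""
    part_power: int   # ring has 2 ** part_power partitions
    replicas: int     # storage locations per partition
    disks: list       # storage locations, e.g. ["disk0", "disk1", ...]

    @property
    def partition_count(self) -> int:
        return 2 ** self.part_power

    def locations(self, partition: int) -> list:
        # Naive round-robin placement, for illustration only.
        n = len(self.disks)
        return [self.disks[(partition + r) % n] for r in range(self.replicas)]


ring = ToyRing(part_power=11, replicas=3, disks=[f"disk{i}" for i in range(50)])
print(ring.partition_count)  # 2048
print(ring.locations(7))     # ['disk7', 'disk8', 'disk9']
```

Each partition resolves to exactly as many storage locations as there are desired replicas, which is the 1:N relationship described in the concept line.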

Partition Power

Partition Power determines the total number of ring partitions, in the form 2^n. Generally speaking, larger clusters require a larger partition power. The optimal partition power is derived from the maximum number of storage locations the cluster is expected to have.

Logic, eg: 50 HDDs

50 ( Max Number of Storage Locations = Disk ) * 100 ( Desired Partitions per Storage Location ) / 3 ( Replica Count ) = 1667 ( Target Partitions )

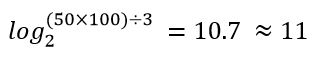

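Log2 ( 1667 ) = 10.7 ( Binary Logarithm of Target Partitions ) ~ 11 ( round up to an integer : Partition Power )

The equation looks like this:

Partition Power = ceil( log2( Max Number of Storage Locations * Desired Partitions per Storage Location / Replica Count ) )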

PS: the binary logarithm of x is the power to which the number 2 must be raised to obtain the value x.

eg: log2 (1024) = 10 since 2 ^ 10 = 1024

eg: log2 (2048) = 11 since 2 ^ 11 = 2048
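The calculation above can be sketched in a few lines of Python. The function name `partition_power` and its default arguments are illustrative assumptions taken from the worked example (100 desired partitions per disk, 3 replicas), and the result is rounded up as in that example.

```python
import math


def partition_power(max_disks: int, partitions_per_disk: int = 100,
                    replicas: int = 3) -> int:
    """Smallest n such that 2**n covers the target number of partitions."""
    target_partitions = max_disks * partitions_per_disk / replicas
    return math.ceil(math.log2(target_partitions))


print(partition_power(50))  # 11
```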

Here is how those numbers feed the ring builder commands when your maximum drive count is 50 and your minimum is 10. The partition power passed to the commands is 11. With that power, the maximum per drive is 614 partitions, which is what each drive holds when the cluster has only the minimum of 10 drives (2^11 * 3 / 10). Conversely, the minimum per drive is 123 partitions, when the cluster has all 50 drives (2^11 * 3 / 50).

Calculating Partition Power

Number of partitions / drive:

(2^[Partition Power] x [Replica Count]) / [Disks] ~ 100 partitions/disk

#e.g. - 1

- Partition Power = 16

- Replicas = 3

- Disks = 2000

- Number of Partitions/Drive : ( 2^16 * 3 ) / 2000 = 98.3

#e.g. - 2 (solving for the disk count instead)

- Partition Power = 16

- Replicas = 3

- Target Partitions/Drive = 100

- Number of Disks : ( 2^16 * 3 ) / 100 = 1966
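As a quick check of the formula, here is a minimal sketch. The helper name `partitions_per_disk` is an assumption for illustration and is not part of any Swift tool.

```python
def partitions_per_disk(part_power: int, replicas: int, disks: int) -> float:
    """(2^partition_power * replica_count) / disks."""
    return (2 ** part_power) * replicas / disks


print(round(partitions_per_disk(16, 3, 2000), 1))  # 98.3
print(round(partitions_per_disk(11, 3, 10)))       # 614 (10-drive minimum)
print(round(partitions_per_disk(11, 3, 50)))       # 123 (50-drive maximum)
```

The last two lines reproduce the per-drive maximum and minimum for the 10-to-50-drive example with partition power 11.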

If we assume 3 TB drives, the following table lists some partition power values and how they translate to disk count, raw storage, and usable storage once the cluster has grown as large as it can ( ~ 100 partitions per drive ).

| Partition Power | Target Disk Count | Cluster Raw (3 TB/disk) | Cluster Usable (Raw / 3 replicas) |
|---|---|---|---|
| 16 | 1966 | 5.90 PB | 1.97 PB |
| 17 | 3932 | 11.80 PB | 3.93 PB |
| 18 | 7864 | 23.59 PB | 7.86 PB |
| 19 | 15728 | 47.18 PB | 15.73 PB |
| 20 | 31457 | 94.37 PB | 31.46 PB |
| 21 | 62914 | 188.74 PB | 62.91 PB |
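The table rows can be reproduced with a short sketch, assuming 3 TB disks, 3 replicas, 100 partitions per disk, and decimal units (1 PB = 1000 TB). The function name `cluster_row` and the constants are illustrative.

```python
DISK_TB = 3           # assumed drive size
REPLICAS = 3          # assumed replica count
PARTS_PER_DISK = 100  # target partitions per drive


def cluster_row(part_power: int):
    """Return (target disk count, raw PB, usable PB) for a partition power."""
    disks = int(2 ** part_power * REPLICAS / PARTS_PER_DISK)
    raw_pb = disks * DISK_TB / 1000
    usable_pb = raw_pb / REPLICAS
    return disks, round(raw_pb, 2), round(usable_pb, 2)


for power in range(16, 22):
    print(power, *cluster_row(power))
```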

Object Hash

The object hash is a unique identifier for every object stored in the cluster. It is used to map objects to ring partitions.

We can use the RESTful API (an HTTP PUT) to store an object in Swift. When we manipulate an object, the "PATH" is the key: the object path is extracted from the request, and the prefix and suffix salts are added, eg: PREFIX_SALT + '/Account/Container/Object' + SUFFIX_SALT. The MD5 of that string is then computed and returned as the object hash. The hash is calculated from the object's "PATH", not the object's contents. The prefix and suffix salts are configured at the cluster level.

eg: take the MD5 hash of "johnny" as the object hash of the object we would like to store.

d0f59baadadd3349e4a9b2674bcceae8
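A minimal sketch of the path hashing described above. The salt values and the function name `object_hash` are placeholders invented for this example; real salts come from the cluster configuration.

```python
import hashlib

# Hypothetical salts; real values are configured at the cluster level.
PREFIX_SALT = b"example_prefix"
SUFFIX_SALT = b"example_suffix"


def object_hash(account: str, container: str, obj: str) -> str:
    """MD5 of the salted object PATH (not of the object's contents)."""
    path = f"/{account}/{container}/{obj}".encode("utf-8")
    return hashlib.md5(PREFIX_SALT + path + SUFFIX_SALT).hexdigest()


print(object_hash("Account", "Container", "Object"))
```

Because only the path is hashed, overwriting an object with new contents keeps it in the same ring partition.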

As mentioned, the object hash maps an object to a ring partition. Here is an example that uses the partition power and the MD5 hash of the string to find the ring partition for the object.

1. Swift only uses the first 4 bytes of the hash.

d0 f5 9b aa da dd 33 49 e4 a9 b2 67 4b cc ea e8

2. The first 4 bytes (32 bits) of the hash are converted to binary. With a partition power of 11, the top 11 bits are significant.

a. Convert each hex digit to binary

eg: d0 = 1101 0000

1101 0000 1111 0101 1001 1011 1010 1010

b. Since we assumed Max = 50 drives and partition power = 11 earlier, we collect the top 11 bits (in brackets).

[1101 0000 111]1 0101 1001 1011 1010 1010

3. The value is bit-shifted to the right by 21 bits, since the total is 32 bits and 32 bits - 11 bits ( partition power ) = 21.

0000 0000 0000 0000 0000 0 1101 0000 111

4. Converting the binary value to decimal, we get ring partition 1671.

0000 0000 0000 0000 0000 0110 1000 0111

= 2^0*1 + 2^1*1 + 2^2*1 + 2^7*1 + 2^9*1 + 2^10*1

= 1671
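The four steps can be sketched in Python as follows. The function name `ring_partition` is illustrative; the logic is just "take the first 4 bytes as a big-endian unsigned integer, then shift right by 32 minus the partition power".

```python
import struct


def ring_partition(hex_digest: str, part_power: int) -> int:
    """Map an object hash to a ring partition number."""
    first_four = bytes.fromhex(hex_digest)[:4]   # step 1: first 4 bytes
    (value,) = struct.unpack(">I", first_four)   # step 2: 32-bit big-endian int
    return value >> (32 - part_power)            # steps 3-4: keep the top bits


part = ring_partition("d0f59baadadd3349e4a9b2674bcceae8", 11)
print(part, format(part, "011b"))  # 1671 11010000111
```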

Replicas

- For HA purposes, replicas (copies) of account databases, container databases, and objects are required, and each replica is guaranteed to reside in a different zone.

PS: With 3 replicas, a write is committed once a quorum of 2 acks has been received; it never needs to wait for the 3rd ack.
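The 2-of-3 rule can be expressed as a simple majority. This is an assumption made for illustration: it matches the 3-replica case described here, but it is not claimed to be the exact formula Swift uses for other replica counts.

```python
def write_quorum(replicas: int) -> int:
    """Simple majority of replicas (illustrative assumption)."""
    return replicas // 2 + 1


def write_committed(acks: int, replicas: int = 3) -> bool:
    """A write counts as committed once a quorum of acks has arrived."""
    return acks >= write_quorum(replicas)


print(write_quorum(3))     # 2
print(write_committed(2))  # True
```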

Handoffs - how Swift handles failure

- A handoff is a standby storage location, ready in the event that a primary storage location is full or not available.

- A handoff location for any given partition is a primary storage location for other partitions.

- A handoff waits for a primary to fail; data is then copied to the handoff. When the primary comes back, the data is copied from the handoff to the primary again and the handoff is cleaned up.

PS: the ring includes 3 primary replicas plus 1 handoff
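A toy sketch of the handoff idea, assuming a hypothetical `pick_locations` helper and an `is_available` callback. It only shows the substitution of a standby location for a failed primary and does not reflect how Swift actually orders handoff nodes.

```python
def pick_locations(primaries, all_disks, is_available):
    """Return write targets, substituting handoffs for unavailable primaries."""
    # Handoff candidates: available disks that are not primaries for this partition.
    handoffs = (d for d in all_disks if d not in primaries and is_available(d))
    chosen = []
    for disk in primaries:
        if is_available(disk):
            chosen.append(disk)
        else:
            chosen.append(next(handoffs))  # StopIteration if no handoff is left
    return chosen


disks = [f"d{i}" for i in range(6)]
down = {"d2"}
print(pick_locations(["d1", "d2", "d3"], disks, lambda d: d not in down))
# ['d1', 'd0', 'd3']
```

Here `d0` stands in for the failed primary `d2`, while remaining a primary location for other partitions.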

Swift Replicators

- Replicators ensure data is present on all primary storage locations. If a primary storage location fails, data is replicated to handoff storage locations.

- Handoff storage locations participate in replication in a failure scenario.

- Replication is push-based.

Swift Auditors

Auditors identify corrupted data and move it into quarantine. They use MD5 hashes of the data, which are stored in the XFS xattrs of each file. Replicators then restore a good copy from the other primary nodes; replication is push-based.

PS: XFS vs EXT4: XFS is currently the best choice for Swift.
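A minimal sketch of the audit check. To keep it portable, the expected MD5 is passed in as an argument rather than read from an xattr, and the `audit` function and quarantine directory layout are assumptions for this example only.

```python
import hashlib
import os
import shutil
import tempfile


def audit(path: str, expected_md5: str, quarantine_dir: str) -> bool:
    """Return True if the file matches its stored MD5, else quarantine it."""
    with open(path, "rb") as f:
        actual = hashlib.md5(f.read()).hexdigest()
    if actual == expected_md5:
        return True
    # Corrupted: move the file aside so a good copy can be replicated back.
    os.makedirs(quarantine_dir, exist_ok=True)
    shutil.move(path, os.path.join(quarantine_dir, os.path.basename(path)))
    return False


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "object.data")
    with open(path, "wb") as f:
        f.write(b"hello")
    quarantine = os.path.join(tmp, "quarantined")
    print(audit(path, hashlib.md5(b"hello").hexdigest(), quarantine))  # True
    print(audit(path, "0" * 32, quarantine))                           # False
```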

Sum

Cluster

- Region ( Data Center )

- Zone ( Rack )

- Node ( Server )

- Disk ( Drive )

Reference:

https://rackerlabs.github.io/swift-ppc/

https://github.com/openstack/swift
