This is a video demo showing how to use VirtualBox VMs running a Kubernetes/Docker cluster as computing resources to process videos in parallel (basically with ffmpeg). The video repository is stored entirely in OpenStack Swift object storage.

This is another demo that uses GKE as the computing resource. The demo scenario is the same; it just shows how easy it is to leverage GKE and how easy it is to integrate it with your on-prem object storage.

This is another demo that uses GKE as the computing resource. The scenario is much the same, but it uses the Swift Object Notifier (a Swift crawler backed by a MySQL DB) as the trigger for Kubernetes Jobs (Pods).

This demo has a similar scenario, but it uses a private Docker image hub and dynamically creates a GKE cluster, then destroys it once the Kubernetes jobs are done.

https://youtu.be/dI87ccu3jw4

Thursday, December 8, 2016

Tuesday, November 15, 2016

Docker Registry - How to use Local Host Volume or OpenStack Swift as Docker Image Repository

***outline***

1. Leverage the Docker Registry container to keep the Docker image repository on a local host disk volume (local directory)

2. Build and leverage a Docker Registry container (v1) to keep a remote Docker image repository on OpenStack Swift

a. Build a Customized Docker Registry Container Image

b. Push Customized Docker Registry Image

c. Push Customized Docker Image ( Ubuntu with ffmpeg and Python SwiftClient )

3. Leverage Docker Registry Container ( v2 ) image directly

a. Docker Run standard Registry Image directly

b. Inject the configuration file into the container

4. Set up insecure access from remote hosts

***Use Local as Docker Image Repository***

===docker run registry container===
$ docker run -d -p 5000:5000 --restart=always --name registry registry:2
Unable to find image 'registry:2' locally
2: Pulling from library/registry
Digest: sha256:1152291c7f93a4ea2ddc95e46d142c31e743b6dd70e194af9e6ebe530f782c17
Status: Downloaded newer image for registry:2
ed13754c8994680f9efd12d58ea3d36632c4be7a4a825f65c9cf5793e1949e5a

===Or point the docker registry at a specific host directory when running the registry container===
$ docker run -d -p 5000:5000 --restart=always --name registry \
-v `pwd`/data:/var/lib/registry \
registry:2

===docker volume===
PS: -v is for volumes: -v <host directory>:<container directory>
For example: -v /src/webapp:/webapp
This mounts the host directory /src/webapp into the container at /webapp. If the path /webapp already exists inside the container's image, the /src/webapp mount overlays but does not remove the pre-existing content.

===docker pull the image you want===
user@ubuntu:~/Desktop/registry$ docker pull hello-world
or
user@ubuntu:~/Desktop/registry$ docker pull chianingwang/ffmpeg_swift

e.g.
$ docker pull hello-world
Using default tag: latest
latest: Pulling from library/hello-world
c04b14da8d14: Pull complete
Digest: sha256:0256e8a36e2070f7bf2d0b0763dbabdd67798512411de4cdcf9431a1feb60fd9
Status: Downloaded newer image for hello-world:latest

===docker tag image===
user@ubuntu:~/Desktop/registry$ docker tag hello-world localhost:5000/myfirstimage
or
user@ubuntu:~/Desktop/registry$ docker tag chianingwang/ffmpeg_swift localhost:5000/ffmpeg_swift

===docker push the image to the local registry===
user@ubuntu:~/Desktop/registry$ docker push localhost:5000/myfirstimage
The push refers to a repository [localhost:5000/myfirstimage]
a02596fdd012: Pushed
latest: digest: sha256:a18ed77532f6d6781500db650194e0f9396ba5f05f8b50d4046b294ae5f83aa4 size: 524
or
user@ubuntu:~/Desktop/registry$ docker push localhost:5000/ffmpeg_swift
The push refers to a repository [localhost:5000/ffmpeg_swift]
f83e8a3fdfe9: Pushed
d6ceb43d6adb: Pushed
91300e2dcc40: Pushed
cb06ae3a1786: Pushed
0e20f4f8a593: Pushed
1633f88f8c9f: Pushed
46c98490f575: Pushed
8ba4b4ea187c: Pushed
c854e44a1a5a: Pushed
latest: digest: sha256:34e64669bf4d2b38ea8c03f37ecd28b9f7f335d5bed263aaa86ca28f57c846c9 size: 2200

===docker pull for testing===
user@ubuntu:~/Desktop/registry$ docker pull localhost:5000/ffmpeg_swift
Using default tag: latest
latest: Pulling from myfirstimage
Digest: sha256:a18ed77532f6d6781500db650194e0f9396ba5f05f8b50d4046b294ae5f83aa4
Status: Image is up to date for localhost:5000/myfirstimage:latest

===docker remove registry container===
$ docker stop registry && docker rm -v registry
registry
registry

===compare docker pull time from Docker Hub vs. the local registry===
user@ubuntu:/var$ time docker pull chianingwang/ffmpeg_swift
Using default tag: latest
latest: Pulling from chianingwang/ffmpeg_swift
Digest: sha256:34e64669bf4d2b38ea8c03f37ecd28b9f7f335d5bed263aaa86ca28f57c846c9
Status: Image is up to date for chianingwang/ffmpeg_swift:latest

real 0m1.566s
user 0m0.016s
sys 0m0.024s

user@ubuntu:/var$ time docker pull localhost:5000/myfirstimage
Using default tag: latest
latest: Pulling from myfirstimage
Digest: sha256:34e64669bf4d2b38ea8c03f37ecd28b9f7f335d5bed263aaa86ca28f57c846c9
Status: Image is up to date for localhost:5000/myfirstimage:latest

real 0m0.077s
user 0m0.024s
sys 0m0.024s

***Use Swift as Docker Image Repository***

If your Swift auth is v1, you can either build your own customized registry image and create a container based on it, or emulate v2 auth with your v1 Swift credentials. The examples below give more detail.

In practice, the most up-to-date docker registry image does not work with auth/v1.0; it only works with auth/v2.0 or later.
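To see why the auth version matters, it helps to compare what each version actually sends. Swift's v1.0 auth takes the credentials as `X-Auth-User`/`X-Auth-Key` request headers and answers with `X-Storage-Url` and `X-Auth-Token` headers, while Keystone v2.0 expects a JSON body POSTed to `<authurl>/tokens` that names a tenant, which is why the registry:2 config later in this post needs a tenant. Below is a minimal standard-library sketch that only builds the two requests and does not send them; the function names are hypothetical, and the URLs and credentials are the placeholders used in this post.

```python
import json
import urllib.request


def v1_auth_request(auth_url, user, key):
    """Build a Swift v1.0 auth request: credentials travel in headers."""
    return urllib.request.Request(
        auth_url,  # e.g. http://172.28.128.4/auth/v1.0 (placeholder from this post)
        headers={"X-Auth-User": user, "X-Auth-Key": key},
        method="GET",
    )


def v2_auth_request(auth_url, tenant, user, password):
    """Build a Keystone v2.0 token request: credentials travel in a JSON body."""
    body = {
        "auth": {
            "tenantName": tenant,  # the reason registry:2 needs `tenant`
            "passwordCredentials": {"username": user, "password": password},
        }
    }
    return urllib.request.Request(
        auth_url.rstrip("/") + "/tokens",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def v1_token(response_headers):
    """Pick the storage URL and token out of a v1.0 auth response's headers."""
    return response_headers["X-Storage-Url"], response_headers["X-Auth-Token"]
```

To actually authenticate, the request would be passed to `urllib.request.urlopen()` and the response headers (v1.0) or JSON body (v2.0) read back.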

user@ubuntu:~/Desktop$ mkdir registry
user@ubuntu:~/Desktop$ cd registry
user@ubuntu:~/Desktop/registry$ git clone https://github.com/docker/docker-registry.git
Cloning into 'docker-registry'...
remote: Counting objects: 7007, done.
remote: Total 7007 (delta 0), reused 0 (delta 0), pack-reused 7007
Receiving objects: 100% (7007/7007), 1.69 MiB | 2.16 MiB/s, done.
Resolving deltas: 100% (4101/4101), done.
Checking connectivity... done.

===vi Dockerfile and put the content below in it===
user@ubuntu:~/Desktop/registry$ vi Dockerfile

PS: Build a registry-swift image
The official registry container image does not include the Swift storage driver. Nor does it include an option in the sample configuration to override the driver's swift_auth_version from its default value of 2.

You need both to work with Swift object storage. You can get both by building your own image on top of the official registry image via a Dockerfile. On your VM, create a Dockerfile and fill it with the following commands.

===I tried newer registry images, but they did not work in my testing===
user@ubuntu:~/Desktop/registry$ cat Dockerfile
# start from a registry release known to work
FROM registry:0.7.3
# get the swift driver for the registry
RUN pip install docker-registry-driver-swift==0.0.1
# Swift uses v1 auth and the sample config doesn't have an option
# for it so inject one
RUN sed -i '91i\ swift_auth_version: _env:OS_AUTH_VERSION' /docker-registry/config/config_sample.yml

user@ubuntu:~/Desktop/registry$ docker build -t chianingwang/registry-swift:0.7.3 .
Sending build context to Docker daemon 2.567 MB
Step 1 : FROM registry:0.7.3
0.7.3: Pulling from library/registry
a3ed95caeb02: Pull complete
831a6feb5ab2: Pull complete
b32559aac4de: Pull complete
5e99535a7b44: Pull complete
aa076096cff1: Pull complete
423664404a49: Pull complete
a80e194b6d44: Pull complete
b77ea15f690f: Pull complete
b6de41721ec3: Pull complete
9b59b437f9f7: Pull complete
d76adb3b394d: Pull complete
60dcc9127244: Pull complete
9e72c0494fc3: Pull complete
936a60cdd427: Pull complete
Digest: sha256:e3ba9e4eba5320844c0a09bbe1a964c8c43758dcea5ddda042d1ceb88e6a00ff
Status: Downloaded newer image for registry:0.7.3
---> 89eba9a6d8af
Step 2 : RUN pip install docker-registry-driver-swift==0.0.1
---> Running in 8531b6e481d5
Downloading/unpacking docker-registry-driver-swift==0.0.1
Downloading docker-registry-driver-swift-0.0.1.tar.gz
Running setup.py (path:/tmp/pip_build_root/docker-registry-driver-swift/setup.py) egg_info for package docker-registry-driver-swift
Downloading/unpacking python-swiftclient>=2.0 (from docker-registry-driver-swift==0.0.1)
Requirement already satisfied (use --upgrade to upgrade): docker-registry-core>=1,<2 in /usr/local/lib/python2.7/dist-packages (from docker-registry-driver-swift==0.0.1)
Requirement already satisfied (use --upgrade to upgrade): six>=1.5.2 in /usr/lib/python2.7/dist-packages (from python-swiftclient>=2.0->docker-registry-driver-swift==0.0.1)
Downloading/unpacking futures>=3.0 (from python-swiftclient>=2.0->docker-registry-driver-swift==0.0.1)
Downloading futures-3.0.5-py2-none-any.whl
Requirement already satisfied (use --upgrade to upgrade): requests>=1.1 in /usr/local/lib/python2.7/dist-packages (from python-swiftclient>=2.0->docker-registry-driver-swift==0.0.1)
Requirement already satisfied (use --upgrade to upgrade): redis==2.9.1 in /usr/local/lib/python2.7/dist-packages (from docker-registry-core>=1,<2->docker-registry-driver-swift==0.0.1)
Requirement already satisfied (use --upgrade to upgrade): boto==2.27.0 in /usr/local/lib/python2.7/dist-packages (from docker-registry-core>=1,<2->docker-registry-driver-swift==0.0.1)
Installing collected packages: docker-registry-driver-swift, python-swiftclient, futures
Running setup.py install for docker-registry-driver-swift
Skipping installation of /usr/local/lib/python2.7/dist-packages/docker_registry/__init__.py (namespace package)
Skipping installation of /usr/local/lib/python2.7/dist-packages/docker_registry/drivers/__init__.py (namespace package)
Installing /usr/local/lib/python2.7/dist-packages/docker_registry_driver_swift-0.0.1-nspkg.pth
Successfully installed docker-registry-driver-swift python-swiftclient futures
Cleaning up...
---> a7166079ff89
Removing intermediate container 8531b6e481d5
Step 3 : RUN sed -i '91i\ swift_auth_version: _env:OS_AUTH_VERSION' /docker-registry/config/config_sample.yml
---> Running in fecabee560d7
---> 62fe241d46bc
Removing intermediate container fecabee560d7
Successfully built 62fe241d46bc

===docker run a container from the image chianingwang/registry-swift:0.7.3===
user@ubuntu:~/Desktop/registry$ docker run -it -d \
-e SETTINGS_FLAVOR=swift \
-e OS_AUTH_URL='http://172.28.128.4/auth/v1.0' \
-e OS_AUTH_VERSION=1 \
-e OS_USERNAME='ss' \
-e OS_PASSWORD='ss' \
-e OS_CONTAINER='docker-registry' \
-e GUNICORN_WORKERS=8 \
-p 127.0.0.1:5000:5000 \
chianingwang/registry-swift:0.7.3
33c1bf492186312417fd334b6c179145a2c2792fb70d450811229f3dc0d24710

===tag the image (chianingwang is my Docker Hub account; I think you can use any name)===
user@ubuntu:~/Desktop/registry$ docker tag chianingwang/registry-swift:0.7.3 127.0.0.1:5000/chianingwang/registry-swift:0.7.3

PS: the tag format is <Repository>/<Image>, where the repository part starts with the registry host:port

e.g.: 127.0.0.1:5000/registry-swift:0.7.3
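The tag is what tells `docker push` where to go. A minimal sketch of the rule as Docker's reference parsing applies it: the first path component is treated as a registry host only when it contains a `.` or `:` or is `localhost`; otherwise the image is assumed to live on Docker Hub (`docker.io`), with single-name official images under `library/`. `split_image_ref` is a hypothetical helper, and it deliberately ignores digests (`@sha256:...`) and name validation.

```python
def split_image_ref(ref):
    """Split an image reference into (registry, repository, tag)."""
    registry, name = "docker.io", ref
    first, sep, rest = ref.partition("/")
    # The first component is a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, name = first, rest
    tag = "latest"  # Docker's default when no tag is given
    # A ':' after the last '/' separates the tag from the repository name.
    if name.rfind(":") > name.rfind("/"):
        name, tag = name[:name.rfind(":")], name[name.rfind(":") + 1:]
    if registry == "docker.io" and "/" not in name:
        name = "library/" + name  # official Docker Hub images
    return registry, name, tag
```

For example, `split_image_ref("127.0.0.1:5000/chianingwang/registry-swift:0.7.3")` yields `("127.0.0.1:5000", "chianingwang/registry-swift", "0.7.3")`, so the push goes to the local registry rather than Docker Hub.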

===push to the Swift container called "docker-registry"===
user@ubuntu:~/Desktop/registry$ docker push 127.0.0.1:5000/chianingwang/registry-swift:0.7.3
The push refers to a repository [127.0.0.1:5000/chianingwang/registry-swift]
0b0a944a7424: Image successfully pushed
ba63d14c7eb1: Image successfully pushed
5f70bf18a086: Image successfully pushed
82e832bb77b7: Image successfully pushed
f35db88c0ec5: Image successfully pushed
b604b17eb6f0: Image successfully pushed
1ef0390143c5: Image successfully pushed
96c54000b8e7: Image successfully pushed
91d310d4dfdc: Image successfully pushed
5585f477130c: Image successfully pushed
d231e7d76582: Image successfully pushed
02594e52fdc5: Image successfully pushed
ceddf6185bdf: Image successfully pushed
874f34b4a164: Image successfully pushed
1ed6001661c5: Image successfully pushed
3d841e359484: Image successfully pushed
Pushing tag for rev [62fe241d46bc] on {http://127.0.0.1:5000/v1/repositories/chianingwang/registry-swift/tags/0.7.3}

===display result===
user@ubuntu:~/Desktop/registry$ swift -A http://172.28.128.4/auth/v1.0 -U ss -K ss list docker-registry
registry/images/011902a6c2f2acb7896c9045d5f3cc85c1c03ca6dd7349aaa91a830ce985bd69/_checksum
registry/images/011902a6c2f2acb7896c9045d5f3cc85c1c03ca6dd7349aaa91a830ce985bd69/ancestry
registry/images/011902a6c2f2acb7896c9045d5f3cc85c1c03ca6dd7349aaa91a830ce985bd69/json
registry/images/011902a6c2f2acb7896c9045d5f3cc85c1c03ca6dd7349aaa91a830ce985bd69/layer
registry/images/013a8d70c9468974a361f88e023e9150bc3d9ecbb319a64b65415b888382d2d4/_checksum
registry/images/013a8d70c9468974a361f88e023e9150bc3d9ecbb319a64b65415b888382d2d4/ancestry
registry/images/013a8d70c9468974a361f88e023e9150bc3d9ecbb319a64b65415b888382d2d4/json
registry/images/013a8d70c9468974a361f88e023e9150bc3d9ecbb319a64b65415b888382d2d4/layer
registry/images/19c8e070c616910eeb634e582f0bd98c4b6c84607df0934ec2bafd3dfc7ebeb2/_checksum
registry/images/19c8e070c616910eeb634e582f0bd98c4b6c84607df0934ec2bafd3dfc7ebeb2/ancestry
registry/images/19c8e070c616910eeb634e582f0bd98c4b6c84607df0934ec2bafd3dfc7ebeb2/json
registry/images/19c8e070c616910eeb634e582f0bd98c4b6c84607df0934ec2bafd3dfc7ebeb2/layer
registry/images/3f97b2fe4ed247923e93be4ace48588e7d888b592035ce4e813823760b9ad26c/_checksum
registry/images/3f97b2fe4ed247923e93be4ace48588e7d888b592035ce4e813823760b9ad26c/ancestry
registry/images/3f97b2fe4ed247923e93be4ace48588e7d888b592035ce4e813823760b9ad26c/json
registry/images/3f97b2fe4ed247923e93be4ace48588e7d888b592035ce4e813823760b9ad26c/layer
registry/images/5f2e9b4054ba78cfd2e076dd8f0aad16ed3b7509b6d12f82f2691777d128c7e5/_checksum
registry/images/5f2e9b4054ba78cfd2e076dd8f0aad16ed3b7509b6d12f82f2691777d128c7e5/ancestry
registry/images/5f2e9b4054ba78cfd2e076dd8f0aad16ed3b7509b6d12f82f2691777d128c7e5/json
registry/images/5f2e9b4054ba78cfd2e076dd8f0aad16ed3b7509b6d12f82f2691777d128c7e5/layer
registry/images/5f70bf18a086007016e948b04aed3b82103a36bea41755b6cddfaf10ace3c6ef/_checksum
registry/images/5f70bf18a086007016e948b04aed3b82103a36bea41755b6cddfaf10ace3c6ef/ancestry
registry/images/5f70bf18a086007016e948b04aed3b82103a36bea41755b6cddfaf10ace3c6ef/json
registry/images/5f70bf18a086007016e948b04aed3b82103a36bea41755b6cddfaf10ace3c6ef/layer
registry/images/62fe241d46bc158d5831cbe6055f1d361e28daeb093d2d7d1e8d1106961b871e/_checksum
registry/images/62fe241d46bc158d5831cbe6055f1d361e28daeb093d2d7d1e8d1106961b871e/ancestry
registry/images/62fe241d46bc158d5831cbe6055f1d361e28daeb093d2d7d1e8d1106961b871e/json
registry/images/62fe241d46bc158d5831cbe6055f1d361e28daeb093d2d7d1e8d1106961b871e/layer
registry/images/7476fb1fbb436b12d7df5f6eab4bfb1ef3b474cebb14477cc1ecd3526ff52a0e/_checksum
registry/images/7476fb1fbb436b12d7df5f6eab4bfb1ef3b474cebb14477cc1ecd3526ff52a0e/ancestry
registry/images/7476fb1fbb436b12d7df5f6eab4bfb1ef3b474cebb14477cc1ecd3526ff52a0e/json
registry/images/7476fb1fbb436b12d7df5f6eab4bfb1ef3b474cebb14477cc1ecd3526ff52a0e/layer
registry/images/75cce76944525454cfdd9d6b98c3278b011f17176f7f2957509fece6cd82f9f0/_checksum
registry/images/75cce76944525454cfdd9d6b98c3278b011f17176f7f2957509fece6cd82f9f0/ancestry
registry/images/75cce76944525454cfdd9d6b98c3278b011f17176f7f2957509fece6cd82f9f0/json
registry/images/75cce76944525454cfdd9d6b98c3278b011f17176f7f2957509fece6cd82f9f0/layer
registry/images/80222d5d1fa4b5ce046268bf4c0fc7d6d4b97214ea4deccd5a8ffdd4bf71da9d/_checksum
registry/images/80222d5d1fa4b5ce046268bf4c0fc7d6d4b97214ea4deccd5a8ffdd4bf71da9d/ancestry
registry/images/80222d5d1fa4b5ce046268bf4c0fc7d6d4b97214ea4deccd5a8ffdd4bf71da9d/json
registry/images/80222d5d1fa4b5ce046268bf4c0fc7d6d4b97214ea4deccd5a8ffdd4bf71da9d/layer
registry/images/85e579e8212bbccedc09b829bda10498f4901161858999e79d9c277c308abc8d/_checksum
registry/images/85e579e8212bbccedc09b829bda10498f4901161858999e79d9c277c308abc8d/ancestry
registry/images/85e579e8212bbccedc09b829bda10498f4901161858999e79d9c277c308abc8d/json
registry/images/85e579e8212bbccedc09b829bda10498f4901161858999e79d9c277c308abc8d/layer
registry/images/8cecfbb6b0609ba2c24113b2ee51231091453c2ecac8624e7600efe3ae18dff0/_checksum
registry/images/8cecfbb6b0609ba2c24113b2ee51231091453c2ecac8624e7600efe3ae18dff0/ancestry
registry/images/8cecfbb6b0609ba2c24113b2ee51231091453c2ecac8624e7600efe3ae18dff0/json
registry/images/8cecfbb6b0609ba2c24113b2ee51231091453c2ecac8624e7600efe3ae18dff0/layer
registry/images/90dca6dc942b121b693d193595588f258ee75a1404977f62e70bb4ef757a239a/_checksum
registry/images/90dca6dc942b121b693d193595588f258ee75a1404977f62e70bb4ef757a239a/ancestry
registry/images/90dca6dc942b121b693d193595588f258ee75a1404977f62e70bb4ef757a239a/json
registry/images/90dca6dc942b121b693d193595588f258ee75a1404977f62e70bb4ef757a239a/layer
registry/images/981c00e17acce66ecae497d27c7e6e62f8aa72071851b0043c894cb74fc0e15a/_checksum
registry/images/981c00e17acce66ecae497d27c7e6e62f8aa72071851b0043c894cb74fc0e15a/ancestry
registry/images/981c00e17acce66ecae497d27c7e6e62f8aa72071851b0043c894cb74fc0e15a/json
registry/images/981c00e17acce66ecae497d27c7e6e62f8aa72071851b0043c894cb74fc0e15a/layer
registry/images/b4839ba992958b5c8e178f11efa411921862a25a12ee2126200bbc0a1083091c/_checksum
registry/images/b4839ba992958b5c8e178f11efa411921862a25a12ee2126200bbc0a1083091c/ancestry
registry/images/b4839ba992958b5c8e178f11efa411921862a25a12ee2126200bbc0a1083091c/json
registry/images/b4839ba992958b5c8e178f11efa411921862a25a12ee2126200bbc0a1083091c/layer
registry/images/d36d086e4f3cfecf4aaa9b6b72b257d577d8e92e2a44ec75f3e0254973983e05/_checksum
registry/images/d36d086e4f3cfecf4aaa9b6b72b257d577d8e92e2a44ec75f3e0254973983e05/ancestry
registry/images/d36d086e4f3cfecf4aaa9b6b72b257d577d8e92e2a44ec75f3e0254973983e05/json
registry/images/d36d086e4f3cfecf4aaa9b6b72b257d577d8e92e2a44ec75f3e0254973983e05/layer
registry/images/d551631b3fe7c23ef4a83cc2d3e60370655c451e4ffda00e78f291c9468f4b01/_checksum
registry/images/d551631b3fe7c23ef4a83cc2d3e60370655c451e4ffda00e78f291c9468f4b01/ancestry
registry/images/d551631b3fe7c23ef4a83cc2d3e60370655c451e4ffda00e78f291c9468f4b01/json
registry/images/d551631b3fe7c23ef4a83cc2d3e60370655c451e4ffda00e78f291c9468f4b01/layer
registry/images/dfb1385e4dc80b9188dbfbcb13dc59c2517c1e3d11f0b4f2dd301f2182634900/_checksum
registry/images/dfb1385e4dc80b9188dbfbcb13dc59c2517c1e3d11f0b4f2dd301f2182634900/ancestry
registry/images/dfb1385e4dc80b9188dbfbcb13dc59c2517c1e3d11f0b4f2dd301f2182634900/json
registry/images/dfb1385e4dc80b9188dbfbcb13dc59c2517c1e3d11f0b4f2dd301f2182634900/layer
registry/images/e08b305ea4291fc8a26594d87b43ab897ea9f70f4a7497af25dceb59df5248dd/_checksum
registry/images/e08b305ea4291fc8a26594d87b43ab897ea9f70f4a7497af25dceb59df5248dd/ancestry
registry/images/e08b305ea4291fc8a26594d87b43ab897ea9f70f4a7497af25dceb59df5248dd/json
registry/images/e08b305ea4291fc8a26594d87b43ab897ea9f70f4a7497af25dceb59df5248dd/layer
registry/images/e19ec885183a101eb73137de14035d58843f2a8522d7bfbaac341de1bed7c149/_checksum
registry/images/e19ec885183a101eb73137de14035d58843f2a8522d7bfbaac341de1bed7c149/ancestry
registry/images/e19ec885183a101eb73137de14035d58843f2a8522d7bfbaac341de1bed7c149/json
registry/images/e19ec885183a101eb73137de14035d58843f2a8522d7bfbaac341de1bed7c149/layer
registry/images/fd2fdd8628c815b29002c95d2a754d3e3ba8c055479f73bc0ce9f8c0edaa6402/_checksum
registry/images/fd2fdd8628c815b29002c95d2a754d3e3ba8c055479f73bc0ce9f8c0edaa6402/ancestry
registry/images/fd2fdd8628c815b29002c95d2a754d3e3ba8c055479f73bc0ce9f8c0edaa6402/json
registry/images/fd2fdd8628c815b29002c95d2a754d3e3ba8c055479f73bc0ce9f8c0edaa6402/layer
registry/repositories/chianingwang/registry-swift/_index_images
registry/repositories/chianingwang/registry-swift/tag0.7.3_json
registry/repositories/chianingwang/registry-swift/tag_0.7.3

===test download===
user@ubuntu:~/Desktop/K8S$ docker pull 127.0.0.1:5000/chianingwang/registry-swift:0.7.3
Pulling repository 127.0.0.1:5000/chianingwang/registry-swift
62fe241d46bc: Already exists
5f70bf18a086: Already exists
b4839ba99295: Already exists
e19ec885183a: Already exists
3f97b2fe4ed2: Already exists
8cecfbb6b060: Already exists
981c00e17acc: Already exists
75cce7694452: Already exists
85e579e8212b: Already exists
d551631b3fe7: Already exists
19c8e070c616: Already exists
80222d5d1fa4: Already exists
7476fb1fbb43: Already exists
fd2fdd8628c8: Already exists
90dca6dc942b: Already exists
d36d086e4f3c: Already exists
5f2e9b4054ba: Already exists
e08b305ea429: Already exists
011902a6c2f2: Already exists
013a8d70c946: Already exists
dfb1385e4dc8: Already exists
Status: Image is up to date for 127.0.0.1:5000/chianingwang/registry-swift:0.7.3
127.0.0.1:5000/chianingwang/registry-swift: this image was pulled from a legacy registry. Important: This registry version will not be supported in future versions of docker.
===double check docker images again===
user@ubuntu:~/Desktop/registry$ docker images
REPOSITORY                                   TAG      IMAGE ID       CREATED         SIZE
127.0.0.1:5000/chianingwang/registry-swift   latest   1d1d0b4bbf11   5 minutes ago   425.3 MB
chianingwang/registry-swift                  latest   1d1d0b4bbf11   5 minutes ago   425.3 MB
chianingwang/ffmpeg_swift                    latest   077773661f73   5 days ago      480 MB
PS: you can see 127.0.0.1:5000/chianingwang/registry-swift listed there.

***now upload the customized image via the running registry container***

After pushing the registry container image, we now push our customized image.

===because we have the registry container running now===
user@ubuntu:~/Desktop/registry$ docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
31fbf0be8e91 chianingwang/registry-swift "/bin/sh -c 'exec doc" 8 minutes ago Up 8 minutes 127.0.0.1:5000->5000/tcp modest_wozniak

===tag our customized image at localhost (127.0.0.1):5000===
user@ubuntu:~/Desktop/registry$ docker tag chianingwang/ffmpeg_swift 127.0.0.1:5000/chianingwang/ffmpeg_swift
PS: the docker registry is running as a web service at localhost on port 5000. Thus we tag chianingwang/ffmpeg_swift (from Docker Hub) with the 127.0.0.1:5000 prefix.

===double check docker images===
user@ubuntu:~/Desktop/registry$ docker images
REPOSITORY                                   TAG      IMAGE ID       CREATED         SIZE
127.0.0.1:5000/chianingwang/registry-swift   latest   1d1d0b4bbf11   6 minutes ago   425.3 MB
chianingwang/registry-swift                  latest   1d1d0b4bbf11   6 minutes ago   425.3 MB
127.0.0.1:5000/chianingwang/ffmpeg_swift     latest   077773661f73   5 days ago      480 MB
chianingwang/ffmpeg_swift                    latest   077773661f73   5 days ago      480 MB
PS: you can see that the new image (127.0.0.1:5000/chianingwang/ffmpeg_swift) registered at 127.0.0.1:5000 is in the list now.

PS: if you would like to untag, you can try
$ docker rmi 127.0.0.1:5000/chianingwang/registry-swift

===docker push the customized image to the local registry (stored in Swift)===
user@ubuntu:~/Desktop/registry$ docker push 127.0.0.1:5000/chianingwang/ffmpeg_swift
The push refers to a repository [127.0.0.1:5000/chianingwang/ffmpeg_swift]
f83e8a3fdfe9: Image successfully pushed
d6ceb43d6adb: Image successfully pushed
91300e2dcc40: Image successfully pushed
cb06ae3a1786: Image successfully pushed
0e20f4f8a593: Image successfully pushed
1633f88f8c9f: Image successfully pushed
46c98490f575: Image successfully pushed
8ba4b4ea187c: Image successfully pushed
c854e44a1a5a: Image successfully pushed
Pushing tag for rev [077773661f73] on {http://127.0.0.1:5000/v1/repositories/chianingwang/ffmpeg_swift/tags/latest}

===docker pull to see whether the image will be pulled from swift===
user@ubuntu:~/Desktop/registry$ docker pull 127.0.0.1:5000/chianingwang/ffmpeg_swift
Using default tag: latest
Pulling repository 127.0.0.1:5000/chianingwang/ffmpeg_swift
077773661f73: Already exists
c854e44a1a5a: Already exists
dafccc932aec: Already exists
5d232b0ea43a: Already exists
cebd67936ff8: Already exists
3b25e17d01de: Already exists
0ad56157abbe: Already exists
b6d124e3b29f: Already exists
8bb588cd10a1: Already exists
Status: Image is up to date for 127.0.0.1:5000/chianingwang/ffmpeg_swift:latest
127.0.0.1:5000/chianingwang/ffmpeg_swift: this image was pulled from a legacy registry. Important: This registry version will not be supported in future versions of docker.
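In the `swift list` output for the v1 registry, every stored layer appears as four objects under `registry/images/<image id>/`: `_checksum`, `ancestry`, `json` and `layer`. The sketch below uses that observed pattern (an assumption drawn from this one listing, not from the registry's documentation) to flag layers whose objects are incomplete, for example after an interrupted push. `incomplete_layers` is a hypothetical helper that takes the listed object names.

```python
from collections import defaultdict

# Objects observed per layer in the v1 registry listing (assumption).
EXPECTED = {"_checksum", "ancestry", "json", "layer"}


def incomplete_layers(object_names):
    """Return {image_id: missing_files} for layers lacking any expected object."""
    seen = defaultdict(set)
    for name in object_names:
        parts = name.split("/")
        # Layer objects look like registry/images/<image id>/<file>;
        # anything else (e.g. registry/repositories/...) is skipped.
        if len(parts) == 4 and parts[:2] == ["registry", "images"]:
            seen[parts[2]].add(parts[3])
    return {image_id: EXPECTED - files
            for image_id, files in seen.items()
            if EXPECTED - files}
```

Since `swift -A http://172.28.128.4/auth/v1.0 -U ss -K ss list docker-registry` prints one object name per line, its output can be fed in with `.splitlines()`; an empty result means every layer has all four objects.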

***leverage the standard docker registry image to build the registry container for swift auth 1.0***

===prepare config.yml under /etc/docker/registry/===

Prepare a config.yml for a Swift auth 1.0 account as below. Be aware that registry:2 only supports Swift auth v2 or later; thus, the tenant has to be configured.

------------

version: 0.1
log:
  level: debug
  fields:
    service: registry
storage:
  cache:
    blobdescriptor: inmemory
  swift:
    username: demo
    password: xxx
    authurl: https://xxx.com/auth/v2.0
    tenant: AUTH_demo
    insecureskipverify: true
    container: docker-registry
    rootdirectory: /swift/object/name/prefix
http:
  addr: :5000
  headers:
    X-Content-Type-Options: [nosniff]
health:
  storagedriver:
    enabled: true
    interval: 10s
    threshold: 3

------------

or you might try this config

------------

version: 0.1
log:
  level: debug
  fields:
    service: registry
storage:
  cache:
    blobdescriptor: inmemory
  swift:
    username: AUTH_demo:demo
    password: xxx
    authurl: https://xxx.com/auth/v1.0
    insecureskipverify: true
    container: docker-registry
    rootdirectory: /swift/object/name/prefix
http:
  addr: :5000
  headers:
    X-Content-Type-Options: [nosniff]
health:
  storagedriver:
    enabled: true
    interval: 10s
    threshold: 3

------------
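The two configs above differ only in the `storage.swift` block: the v2.0 variant names a `tenant`, while the v1.0 variant folds the account into `username` (`AUTH_demo:demo`). A small pre-flight check can catch a missing key before the registry container starts. This is a sketch under assumptions: the required-key list simply mirrors the keys both configs share (it is not the registry's own validation logic), the tenant rule follows the note above, and since Python's standard library has no YAML parser, `check_swift_storage` (a hypothetical helper) takes the already-parsed config as a dict.

```python
# Keys both example configs set in storage.swift (assumed required).
REQUIRED_SWIFT_KEYS = ("username", "password", "authurl", "container")


def check_swift_storage(config):
    """Return a list of problems found in the storage.swift block of a config dict."""
    swift = (config.get("storage") or {}).get("swift")
    if swift is None:
        return ["storage.swift is missing"]
    problems = [f"storage.swift.{key} is missing"
                for key in REQUIRED_SWIFT_KEYS if not swift.get(key)]
    # Per the note above: with a v2.0 auth URL the tenant must be set.
    if "/v2.0" in swift.get("authurl", "") and not swift.get("tenant"):
        problems.append("storage.swift.tenant is required for auth v2.0")
    return problems
```

An empty list means the block has everything these two examples rely on; it says nothing about whether the credentials are valid.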

===docker run registry and inject config.yml into the container at /etc/docker/registry/config.yml===

$ docker run -d -p 5000:5000 --restart=always --name registry -v `pwd`/config.yml:/etc/docker/registry/config.yml registry:2
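In the command above, `` `pwd` `` expands to an absolute path, which matters because Docker treats a `-v` source that is not an absolute path as a named volume rather than a host file. The sketch below builds the same argument list from Python with the config path made absolute; `registry_run_args` is a hypothetical helper, and its defaults simply mirror the command above.

```python
import os


def registry_run_args(config_path, port=5000, name="registry", image="registry:2"):
    """Build the `docker run` argument list for a registry with an injected config."""
    host_path = os.path.abspath(config_path)  # same role as `pwd` in the shell version
    return [
        "docker", "run", "-d",
        "-p", f"{port}:5000",          # host port -> registry port inside the container
        "--restart=always",
        "--name", name,
        "-v", f"{host_path}:/etc/docker/registry/config.yml",
        image,
    ]
```

`subprocess.run(registry_run_args("config.yml"), check=True)` would then start the container, assuming Docker is installed and `config.yml` sits in the current directory.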

a821cbd81e535c369ad68a28aaca3434b55ea8a6878cf25f779bbd602291303b

===double check===

$ docker ps -a | grep registry

a821cbd81e53 registry:2 "/entrypoint.sh /etc/" 6 seconds ago Up 6 seconds 0.0.0.0:5000->5000/tcp registry

===test with busybox (tag the image with a different repository)===

$ docker pull busybox && docker tag busybox localhost:5000/busybox

Using default tag: latest
latest: Pulling from library/busybox
4b0bc1c4050b: Pull complete
Digest: sha256:817a12c32a39bbe394944ba49de563e085f1d3c5266eb8e9723256bc4448680e
Status: Downloaded newer image for busybox:latest

===test with docker push===

$ docker push localhost:5000/busybox

The push refers to a repository [localhost:5000/busybox]

38ac8d0f5bb3: Pushed

latest: digest: sha256:817a12c32a39bbe394944ba49de563e085f1d3c5266eb8e9723256bc4448680e size: 527
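Besides listing the Swift container, registry:2 can report its own contents through the Docker Registry HTTP API V2: `GET /v2/_catalog` returns `{"repositories": [...]}` and `GET /v2/<name>/tags/list` returns the tags of one repository. A minimal sketch, assuming the registry is reachable over plain HTTP at `localhost:5000` without authentication; the helper names are hypothetical, and the catalog's pagination (the `n` parameter and `Link` header) is ignored.

```python
import json
import urllib.request


def catalog_url(registry="localhost:5000"):
    return f"http://{registry}/v2/_catalog"


def tags_url(repository, registry="localhost:5000"):
    return f"http://{registry}/v2/{repository}/tags/list"


def fetch_json(url, timeout=5):
    """GET a registry API URL and decode the JSON body (plain HTTP, no auth)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def list_images(registry="localhost:5000"):
    """Return {repository: [tags]} for every repository the registry reports."""
    repos = fetch_json(catalog_url(registry)).get("repositories", [])
    return {repo: fetch_json(tags_url(repo, registry)).get("tags") or []
            for repo in repos}
```

After the push above, `list_images()` would be expected to report `busybox` with the `latest` tag.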

===double check in swift===

$ curl -X GET https://xxx.com/v1/AUTH_demo/docker-registry/ -H "X-Auth-Token: AUTH_tkxxx"

files/docker/registry/v2/blobs/sha256/4b/4b0bc1c4050b03c95ef2a8e36e25feac42fd31283e8c30b3ee5df6b043155d3c/data

files/docker/registry/v2/blobs/sha256/79/7968321274dc6b6171697c33df7815310468e694ac5be0ec03ff053bb135e768/data

files/docker/registry/v2/blobs/sha256/81/817a12c32a39bbe394944ba49de563e085f1d3c5266eb8e9723256bc4448680e/data

files/docker/registry/v2/repositories/busybox/_layers/sha256/4b0bc1c4050b03c95ef2a8e36e25feac42fd31283e8c30b3ee5df6b043155d3c/link

files/docker/registry/v2/repositories/busybox/_layers/sha256/7968321274dc6b6171697c33df7815310468e694ac5be0ec03ff053bb135e768/link

files/docker/registry/v2/repositories/busybox/_manifests/revisions/sha256/817a12c32a39bbe394944ba49de563e085f1d3c5266eb8e9723256bc4448680e/link

files/docker/registry/v2/repositories/busybox/_manifests/tags/latest/current/link

files/docker/registry/v2/repositories/busybox/_manifests/tags/latest/index/sha256/817a12c32a39bbe394944ba49de563e085f1d3c5266eb8e9723256bc4448680e/link

segments/2f6/46f636b65722f72656769737472792f76322f7265706f7369746f726965732f62757379626f782f5f75706c6f6164732f39363664393139632d383761322d346337342d393136332d6364313131333132646662312f64617461f89b305f8216ee617b4639336f73e955c4dde449b03bda8d52bdf681214fd63ada39a3ee5e6b4b0d3255bfef95601890afd80709/0000000000000001

segments/2f6/46f636b65722f72656769737472792f76322f7265706f7369746f726965732f62757379626f782f5f75706c6f6164732f65393032646235332d663333612d346433312d623839662d3337363539393266313161382f6461746188833e2988a709f7810f9edfd31f6cb48e16804c8bb6c027f2ad83560d1627c3da39a3ee5e6b4b0d3255bfef95601890afd80709/0000000000000001
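The listing shows how registry:2 lays out content-addressed blobs in Swift: each blob lives at `.../blobs/sha256/<first two hex digits>/<full hex digest>/data`, and the manifest digest `817a12c3...` reported by `docker push` appears there too. The sketch maps a digest to that object name and computes a digest for local bytes; the `files/docker/registry/v2` prefix is taken from this particular listing, and both helper names are hypothetical.

```python
import hashlib


def blob_object_name(digest, prefix="files/docker/registry/v2"):
    """Map an 'algorithm:hex' digest to the Swift object name seen in the listing."""
    algorithm, _, hex_digest = digest.partition(":")
    # Blobs are sharded by the first two hex characters of the digest.
    return f"{prefix}/blobs/{algorithm}/{hex_digest[:2]}/{hex_digest}/data"


def digest_of(data):
    """Content digest of some bytes, in the registry's 'sha256:<hex>' form."""
    return "sha256:" + hashlib.sha256(data).hexdigest()
```

Because the name is derived from the content hash, pushing the same layer twice lands on the same object, which is why identical layers are shared across repositories.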

***setup insecure connection from remote***

To connect to the docker registry container from a remote host, you can either use HTTPS (a self-signed or third-party certificate) or, for testing purposes only, an insecure-registry connection.

For Ubuntu 14.04, you can add this to /etc/default/docker:

DOCKER_OPTS="--insecure-registry=docker-registry:5000"

For Ubuntu 16.04, you need to add this to /etc/docker/daemon.json:

{"insecure-registries":["192.168.xxx.xxx:5000"]}
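Editing `/etc/docker/daemon.json` by hand is easy to get wrong when the file already holds other settings. A minimal sketch that merges one `host:port` entry into `insecure-registries` while keeping existing keys; `add_insecure_registry` is a hypothetical helper, it needs permission to write the file (normally root), and the Docker daemon has to be restarted afterwards (e.g. `sudo systemctl restart docker`) for the change to take effect.

```python
import json
import os


def add_insecure_registry(path, registry):
    """Add `registry` (host:port) to insecure-registries in a daemon.json file.

    Existing settings are kept; the file is created if it does not exist.
    """
    config = {}
    if os.path.exists(path):
        with open(path) as f:
            text = f.read().strip()
        if text:
            config = json.loads(text)
    registries = config.setdefault("insecure-registries", [])
    if registry not in registries:  # idempotent: no duplicate entries
        registries.append(registry)
    with open(path, "w") as f:
        json.dump(config, f, indent=2)
    return config
```

A call such as `add_insecure_registry("/etc/docker/daemon.json", "192.168.0.10:5000")` uses a placeholder address in place of the `192.168.xxx.xxx` above.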

===how to debug docker registry===

1.

docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
86e5dad1a2db registry:2 "/entrypoint.sh /e..." 5 months ago Up 18 minutes 0.0.0.0:443->5000/tcp registry

2.

# docker exec -it registry sh

/ # cat /etc/docker/registry/config.yml

version: 0.1
log:
  level: debug
  fields:
    service: registry

3.

# docker logs -h

Flag shorthand -h has been deprecated, please use --help

Usage: docker logs [OPTIONS] CONTAINER

Fetch the logs of a container

Options:

--details Show extra details provided to logs

-f, --follow Follow log output

--help Print usage

--since string Show logs since timestamp (e.g. 2013-01-02T13:23:37) or relative (e.g. 42m for 42 minutes)

--tail string Number of lines to show from the end of the logs (default "all")

-t, --timestamps Show timestamps

4.

# docker logs registry --tail 2
time="2017-07-06T04:29:30.625145719Z" level=debug msg="swift.List(\"/\")" go.version=go1.7.3 instance.id=fa9cd8c9-9433-4f9f-9d26-903fcaddb52d service=registry trace.duration=9.045089ms trace.file="/go/src/github.com/docker/distribution/registry/storage/driver/base/base.go" trace.func="github.com/docker/distribution/registry/storage/driver/base.(*Base).List" trace.id=68e13ca7-4aa1-4c88-a47a-eb1a3fb342ac trace.line=150 version=v2.6.0
time="2017-07-06T04:29:40.626817962Z" level=debug msg="swift.List(\"/\")" go.version=go1.7.3 instance.id=fa9cd8c9-9433-4f9f-9d26-903fcaddb52d service=registry trace.duration=10.729464ms trace.file="/go/src/github.com/docker/distribution/registry/storage/driver/base/base.go" trace.func="github.com/docker/distribution/registry/storage/driver/base.(*Base).List" trace.id=2b3e1ba7-4d56-4fab-8756-1350191a7053 trace.line=150 version=v2.6.0
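The registry's debug log lines are `key=value` pairs with double-quoted values, so they can be split with the standard library's `shlex`, which honors the quotes and the escaped quotes inside `msg`. A sketch, with `parse_log_line` as a hypothetical helper; it assumes one log entry per line, as `docker logs` prints it above.

```python
import shlex


def parse_log_line(line):
    """Split one registry log line of key=value pairs into a dict."""
    fields = {}
    # shlex.split splits on whitespace but keeps quoted values together
    # and unescapes \" inside double quotes.
    for token in shlex.split(line):
        key, sep, value = token.partition("=")
        if sep:  # skip any token that is not key=value
            fields[key] = value
    return fields
```

Applied to each line of `docker logs registry` output, filtering the resulting dicts on `level` picks out only warnings or errors, and `trace.duration` shows how long each Swift call took.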

===reference===

https://mindtrove.info/docker-registry-softlayer-object-storage/

Tuesday, November 8, 2016

How to install kubernetes

I just want to quickly share my personal experience setting up Kubernetes on Docker.

As described in my earlier post (http://chianingwang.blogspot.com/2015/02/docker-poc-lab-ubuntu-1404-via.html), I'm using Docker as the container platform; here are the steps for Docker installation.

Docker Installation

For example, if your user is vagrant, add it to the docker group:

$ sudo usermod -aG docker vagrant

If you are on CentOS, follow https://docs.docker.com/engine/installation/linux/centos/

$ sudo yum update

$ sudo tee /etc/yum.repos.d/docker.repo <<-'EOF'
[dockerrepo]
name=Docker Repository
baseurl=https://yum.dockerproject.org/repo/main/centos/7/
enabled=1
gpgcheck=1
gpgkey=https://yum.dockerproject.org/gpg
EOF
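The `.repo` file written by the heredoc is plain INI, so it can also be generated with Python's `configparser`. A sketch that renders the same `[dockerrepo]` section, passing `space_around_delimiters=False` so the output keeps the heredoc's `key=value` form; `docker_repo_text` is a hypothetical helper, and its values are copied from the heredoc above.

```python
import configparser
import io


def docker_repo_text():
    """Render the [dockerrepo] section from the heredoc above as INI text."""
    repo = configparser.ConfigParser()
    repo["dockerrepo"] = {
        "name": "Docker Repository",
        "baseurl": "https://yum.dockerproject.org/repo/main/centos/7/",
        "enabled": "1",
        "gpgcheck": "1",
        "gpgkey": "https://yum.dockerproject.org/gpg",
    }
    buf = io.StringIO()
    repo.write(buf, space_around_delimiters=False)  # key=value, no spaces
    return buf.getvalue()
```

Writing the returned text to `/etc/yum.repos.d/docker.repo` as root would have the same effect as the `tee` command.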

$ sudo yum install docker-engine

$ sudo systemctl enable docker.service

$ sudo systemctl start docker

Kubernetes Installation and Configuration

Update sources.list.d for apt

Install required Kubernetes binaries

1. Install Kubernetes basics

2. Start the Kubernetes Master Component

3. Exploring the cluster

Enable Networking

Configure kubernetes cluster via kubectl

Set up the Cluster's Context

Test the cluster

1. Prepare and install the Kubernetes packages




As I mentioned above, I'm using Docker (http://chianingwang.blogspot.com/2015/02/docker-poc-lab-ubuntu-1404-via.html) as the container platform. Here are the steps for installing Docker.

Docker Installation

To run docker without sudo, add your user to the docker group. For example, if your user is vagrant:

$ sudo usermod -aG docker vagrant

If you are on CentOS, follow the official guide (https://docs.docker.com/engine/installation/linux/centos/):

$ sudo yum update

$ sudo tee /etc/yum.repos.d/docker.repo <<-'EOF'
[dockerrepo]
name=Docker Repository
baseurl=https://yum.dockerproject.org/repo/main/centos/7/
enabled=1
gpgcheck=1
gpgkey=https://yum.dockerproject.org/gpg
EOF

$ sudo yum install docker-engine

$ sudo systemctl enable docker.service

$ sudo systemctl start docker
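To double-check the repository file written above, here is a minimal sketch that reads one `key=value` setting from a section of a yum `.repo` file with awk. The helper name `repo_flag` is hypothetical (it is not a yum command), and it assumes the simple layout shown above, with no spaces around `=`.

```shell
# Hypothetical helper: print the value of KEY inside [SECTION] of a yum .repo file.
# Assumes plain "key=value" lines, as in /etc/yum.repos.d/docker.repo above.
repo_flag() {
  local file="$1" section="$2" key="$3"
  awk -v sec="[$section]" -v key="$key" '
    /^\[/ { in_sec = ($0 == sec) }           # entering a new [section]
    in_sec && index($0, key "=") == 1 {      # line starts with "key="
      print substr($0, length(key) + 2)      # everything after "key="
      exit
    }' "$file"
}

# Usage: both should print 1 for the repo file used in this post.
# repo_flag /etc/yum.repos.d/docker.repo dockerrepo enabled
# repo_flag /etc/yum.repos.d/docker.repo dockerrepo gpgcheck
```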

Kubernetes Installation and Configuration

Update sources.list.d for apt

Install the required Kubernetes binaries:

- kubeadm: the admin tool that simplifies starting a Kubernetes cluster.
- kubectl: the cluster command-line tool.
- kubelet: the node manager. To use kubeadm, we also need kubectl and kubelet.
- kubernetes-cni: Container Network Interface (CNI) support, for multi-host networking should we add more nodes.

1. Install the Kubernetes basics
2. Start the Kubernetes master components
3. Explore the cluster

Enable networking

Configure the Kubernetes cluster via kubectl

Set up the cluster's context

Test the cluster

Alternatively, Docker can be installed with the convenience script from get.docker.com:

$ wget -qO- https://get.docker.com/ | sh

### To add a user to the “docker” group, run the following command:

$ sudo usermod -aG docker <user>

$ sudo reboot

### Verify the Docker installation

$ docker --version

Or

$ sudo systemctl status docker

Or

$ ls -l /var/run/docker.sock

Or

$ docker version

(this should show both the client and the server versions)

Or

$ docker info
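`usermod -aG` only affects new login sessions, which is why the reboot above is needed. As a quick sanity check, the hypothetical helper below (not part of Docker) asks `id -nG` whether a user is listed in a group. Given a user name, `id` looks the groups up in the user database, so it should reflect the change even before you log in again.

```shell
# Hypothetical helper: succeed if USER (default: current user) is in GROUP.
# With an explicit user name, `id -nG` reads the group database, so a fresh
# `usermod -aG` shows up here even before the user logs in again.
in_group() {
  local group="$1" user="${2:-$(id -un)}"
  id -nG "$user" | tr ' ' '\n' | grep -qx -- "$group"
}

# Usage:
# in_group docker && echo "docker group OK (re-login to use it)"
```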

Once Docker is ready, we can install Kubernetes.

a. # curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -

b. # cat <<EOF > /etc/apt/sources.list.d/kubernetes.list

> deb http://apt.kubernetes.io/ kubernetes-xenial main

> EOF

c. # apt-get update
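If you prefer to script step b instead of typing the heredoc, a minimal sketch follows. The function name and its optional path argument are hypothetical; the argument only exists so the function can be tried on a scratch file, and the repository line is the one used above.

```shell
# Hypothetical helper: write the Kubernetes apt source used in this post.
# TARGET defaults to the real location, which requires root.
write_k8s_apt_source() {
  local target="${1:-/etc/apt/sources.list.d/kubernetes.list}"
  cat > "$target" <<'EOF'
deb http://apt.kubernetes.io/ kubernetes-xenial main
EOF
}

# Usage (as root):
# write_k8s_apt_source && apt-get update
```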

PS: Kubernetes 1.4 added kubeadm as an alpha tool.

These steps are for Ubuntu 16.04. For other operating systems such as CentOS or HypriotOS, refer to the official Kubernetes documentation.

Ubuntu


# apt-get install -y kubelet kubeadm kubectl kubernetes-cni

CentOS

# setenforce 0

# yum install -y kubelet kubeadm kubectl kubernetes-cni

[vagrant@ss-12 ~]$ sudo su -

Last login: Fri Nov 18 19:11:47 UTC 2016 on pts/0

[root@ss-12 ~]# systemctl enable docker && systemctl start docker

[root@ss-12 ~]# systemctl enable kubelet && systemctl start kubelet
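The Ubuntu and CentOS commands above differ only in the package manager and the `setenforce 0` step. A minimal sketch that picks between them by reading the `ID=` field of `/etc/os-release`; the helper name and the optional file argument are hypothetical, it only prints the commands rather than running them, and only the two distributions covered here are handled.

```shell
# Hypothetical helper: print (not run) the install commands used in this post
# for Ubuntu or CentOS, based on ID=... in an os-release file.
k8s_install_cmds() {
  local file="${1:-/etc/os-release}" os_id
  os_id=$(sed -n 's/^ID=//p' "$file" | tr -d '"')
  case "$os_id" in
    ubuntu)
      echo "apt-get install -y kubelet kubeadm kubectl kubernetes-cni"
      ;;
    centos)
      echo "setenforce 0"
      echo "yum install -y kubelet kubeadm kubectl kubernetes-cni"
      ;;
    *)
      echo "unsupported distribution: ${os_id:-unknown}" >&2
      return 1
      ;;
  esac
}

# Usage: review the output, then run the commands as root.
# k8s_install_cmds
```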

Next, initialize the master with kubeadm init. For example:

$ sudo kubeadm init

Running pre-flight checks

<master/tokens> generated token: "c9e394.b98e34623bf468f4"

<master/pki> generated Certificate Authority key and certificate:

Issuer: CN=kubernetes | Subject: CN=kubernetes | CA: true

Not before: 2016-11-18 19:14:06 +0000 UTC Not After: 2026-11-16 19:14:06 +0000 UTC

Public: /etc/kubernetes/pki/ca-pub.pem

Private: /etc/kubernetes/pki/ca-key.pem

Cert: /etc/kubernetes/pki/ca.pem

<master/pki> generated API Server key and certificate:

Issuer: CN=kubernetes | Subject: CN=kube-apiserver | CA: false

Not before: 2016-11-18 19:14:06 +0000 UTC Not After: 2017-11-18 19:14:06 +0000 UTC

Alternate Names: [10.0.2.15 10.96.0.1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local]

Public: /etc/kubernetes/pki/apiserver-pub.pem

Private: /etc/kubernetes/pki/apiserver-key.pem

Cert: /etc/kubernetes/pki/apiserver.pem

<master/pki> generated Service Account Signing keys:

Public: /etc/kubernetes/pki/sa-pub.pem

Private: /etc/kubernetes/pki/sa-key.pem

<master/pki> created keys and certificates in "/etc/kubernetes/pki"

<util/kubeconfig> created "/etc/kubernetes/kubelet.conf"

<util/kubeconfig> created "/etc/kubernetes/admin.conf"

<master/apiclient> created API client configuration

<master/apiclient> created API client, waiting for the control plane to become ready

<master/apiclient> all control plane components are healthy after 384.310122 seconds

<master/apiclient> waiting for at least one node to register and become ready

<master/apiclient> first node is ready after 3.004470 seconds

<master/apiclient> attempting a test deployment

<master/apiclient> test deployment succeeded

<master/discovery> created essential addon: kube-discovery, waiting for it to become ready

<master/discovery> kube-discovery is ready after 16.003111 seconds

<master/addons> created essential addon: kube-proxy

<master/addons> created essential addon: kube-dns

Kubernetes master initialised successfully!

You can now join any number of machines by running the following on each node:

Check which flags kubelet is running with:

# ps -fwwp `pgrep kubelet`

UID PID PPID C STIME TTY TIME CMD

root 817 1 3 11:25 ? 00:01:25 /usr/bin/kubelet --kubeconfig=/etc/kubernetes/kubelet.conf --require-kubeconfig=true --pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true --network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin --cluster-dns=100.64.0.10 --cluster-domain=cluster.local --v=4
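The kubelet command line above shows where kubelet expects CNI configuration (`--cni-conf-dir=/etc/cni/net.d`) and plugins (`--cni-bin-dir=/opt/cni/bin`). A minimal sketch for pulling one flag's value out of such a line; the helper name is hypothetical, and it only understands the `--flag=value` form printed here, not `--flag value`.

```shell
# Hypothetical helper: print the value of --NAME=VALUE from a command line.
# Only the "--flag=value" form (as printed by ps above) is handled.
kubelet_flag() {
  printf '%s\n' "$2" | awk -v flag="--$1=" '{
    for (i = 1; i <= NF; i++)
      if (index($i, flag) == 1) {          # field starts with "--name="
        print substr($i, length(flag) + 1)
        found = 1
        exit
      }
  } END { exit !found }'
}

# Usage on a live node:
# kubelet_flag cni-conf-dir "$(ps -o args= -p "$(pgrep -o kubelet)")"
```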

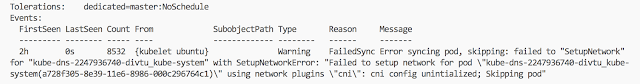

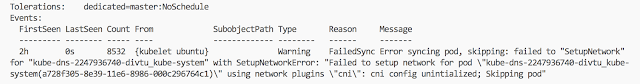

The very last line is the clue to our problem: "cni config uninitialized". Our system is configured to use CNI, but we have not installed a CNI networking system yet. We can easily add the Weave CNI drivers, which provide VXLAN-based container networking, using a pod spec from the Internet.

PS: you can also spot the problem with $ kubectl get pods --all-namespaces: kube-dns will not start until a pod network is installed.

So here we install the Weave CNI drivers.
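"cni config uninitialized" essentially means kubelet found no network configuration in its CNI config directory. A hedged quick check is sketched below: the helper name is hypothetical, the default directory comes from the kubelet flags above, and the accepted extensions (`.conf`, `.conflist`, `.json`) are an assumption based on what the CNI library loads, so older kubelet versions may differ.

```shell
# Hypothetical helper: succeed if DIR holds at least one CNI network config.
# The accepted extensions (.conf, .conflist, .json) are an assumption.
cni_configured() {
  local dir="${1:-/etc/cni/net.d}" f
  for f in "$dir"/*.conf "$dir"/*.conflist "$dir"/*.json; do
    [ -f "$f" ] && return 0        # unmatched globs stay literal and fail -f
  done
  return 1
}

# Usage:
# cni_configured || echo "no CNI config yet: install a pod network add-on"
```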

You must install a pod network add-on so that your pods can communicate with each other. This has to be done before you deploy any applications to your cluster, and before kube-dns will start up. Note also that kubeadm only supports CNI-based networks, so kubenet-based networks will not work.

To start using your cluster, run the following as a regular user:

$ mkdir -p $HOME/.kube
$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

# If you just want to run an all-in-one development environment, remove the master taint from all nodes, including the master node, so the scheduler can place pods everywhere. Depending on the kubeadm version, the taint is node-role.kubernetes.io/master or, in older releases, "dedicated":

$ kubectl taint nodes --all node-role.kubernetes.io/master-

node "ss02" untainted

$ kubectl taint nodes --all dedicated-

# kubectl apply -f https://git.io/weave-kube

daemonset "weave-net" created

This creates the weave-net pod in the kube-system namespace (weave-net-2ifri in the listing below).

Kubernetes runs its own components as containers too, so we can list all the pods created to run Kubernetes itself:

user@ubuntu:~$ kubectl get pods --all-namespaces

NAMESPACE     NAME                             READY     STATUS    RESTARTS   AGE
kube-system   etcd-ubuntu                      1/1       Running   1          5d
kube-system   kube-apiserver-ubuntu            1/1       Running   3          5d
kube-system   kube-controller-manager-ubuntu   1/1       Running   1          5d
kube-system   kube-discovery-982812725-h210x   1/1       Running   1          5d
kube-system   kube-dns-2247936740-swjx1        3/3       Running   3          5d
kube-system   kube-proxy-amd64-s4hor           1/1       Running   1          5d
kube-system   kube-scheduler-ubuntu            1/1       Running   1          5d
kube-system   weave-net-2ifri

PS: git.io is GitHub's URL-shortening service. The weave-kube link points to a Kubernetes spec for a DaemonSet, a resource that runs a pod on every node in the cluster.

This Weave DaemonSet ensures that the weaveworks/weave-kube:1.7.1 image is running in a pod on all hosts, providing support for multi-host networking throughout the cluster.


PS: kube-dns may fail at first because the pod network hasn't been configured yet. Once the Kubernetes pod network is set up, the pods should gradually recover.

$ kubectl get pods --all-namespaces

NAMESPACE     NAME                                           READY     STATUS    RESTARTS   AGE
kube-system   dummy-2088944543-mn4d6                         1/1       Running   0          10m
kube-system   etcd-ss-12.swiftstack.idv                      1/1       Running   0          9m
kube-system   kube-apiserver-ss-12.swiftstack.idv            1/1       Running   6          9m
kube-system   kube-controller-manager-ss-12.swiftstack.idv   1/1       Running   0          9m
kube-system   kube-discovery-1150918428-pci3z                1/1       Running   0          10m
kube-system   kube-dns-654381707-oom3u                       2/3       Running   4          10m
kube-system   kube-proxy-fqlgx                               1/1       Running   0          10m
kube-system   kube-scheduler-ss-12.swiftstack.idv            1/1       Running   0          10m
kube-system   weave-net-xbaiq                                2/2       Running   0          5m
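Instead of eyeballing the READY column (kube-dns at 2/3 above), the listing can be filtered for pods that are not fully up. A minimal sketch with a hypothetical helper name; it assumes the default column order of `kubectl get pods --all-namespaces` (NAMESPACE NAME READY STATUS RESTARTS AGE) and reports anything that is not `Running` with all containers ready.

```shell
# Hypothetical helper: read `kubectl get pods --all-namespaces` output on stdin
# and print NAMESPACE/NAME for pods not Running with all containers ready.
not_ready_pods() {
  awk 'NR > 1 && NF >= 4 {
    split($3, r, "/")                      # READY column, e.g. "2/3"
    if (r[1] != r[2] || $4 != "Running") print $1 "/" $2
  }'
}

# Usage:
# kubectl get pods --all-namespaces | not_ready_pods
```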


Running kubectl help config or kubectl config view shows how kubectl is configured. To control a cluster with kubectl, including a remote one, we must specify the cluster endpoint. By default, the kubectl CLI tries to reach the API server on localhost port 8080, for example:

$ curl http://localhost:8080

{
  "paths": [
    "/api",
    "/api/v1",
    "/apis",
    "/apis/apps",
    "/apis/apps/v1alpha1",
    "/apis/authentication.k8s.io",
    "/apis/authentication.k8s.io/v1beta1",
    "/apis/authorization.k8s.io",
    "/apis/authorization.k8s.io/v1beta1",
    "/apis/autoscaling",
    "/apis/autoscaling/v1",
    "/apis/batch",
    "/apis/batch/v1",
    "/apis/batch/v2alpha1",
    "/apis/certificates.k8s.io",
    "/apis/certificates.k8s.io/v1alpha1",
    "/apis/extensions",
    "/apis/extensions/v1beta1",
    "/apis/policy",
    "/apis/policy/v1alpha1",
    "/apis/rbac.authorization.k8s.io",
    "/apis/rbac.authorization.k8s.io/v1alpha1",
    "/apis/storage.k8s.io",
    "/apis/storage.k8s.io/v1beta1",
    "/healthz",
    "/healthz/ping",
    "/logs",
    "/metrics",
    "/swaggerapi/",
    "/ui/",
    "/version"
  ]
}
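The path list is handy for checking that an API group exists before relying on it; the Job in test.yaml later in this post, for example, uses `batch/v1`. A minimal sketch with a hypothetical helper name; it is a plain text match on the quoted path, not a JSON parser.

```shell
# Hypothetical helper: read the API server's root JSON (e.g. from
# `curl -s http://localhost:8080`) on stdin and succeed if PATH is listed.
# Matches the quoted string exactly, so "/apis/batch" does not match "/apis/batch/v1".
api_has_path() {
  grep -qF -- "\"$1\""
}

# Usage:
# curl -s http://localhost:8080 | api_has_path /apis/batch/v1 && echo "Jobs supported"
```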

$ kubectl config set-cluster local --server=http://127.0.0.1:8080 --insecure-skip-tls-verify=true

cluster "local" set.

# ls -la .kube/

total 16

drwxr-xr-x 3 root root 4096 Oct 25 06:39 .

drwx------ 4 root root 4096 Oct 24 23:37 ..

-rw------- 1 root root 191 Oct 25 06:39 config


drwxr-xr-x 3 root root 4096 Oct 24 23:37 schema

# cat .kube/config

apiVersion: v1
clusters:
- cluster:
    insecure-skip-tls-verify: true
    server: http://127.0.0.1:8080
  name: local
contexts: []
current-context: ""
kind: Config
preferences: {}
users: []

# kubectl config set-context local --cluster=local


context "local" set.

# kubectl config use-context local


switched to context "local".

# cat .kube/config

or

# kubectl config view

apiVersion: v1
clusters:
- cluster:
    insecure-skip-tls-verify: true
    server: http://127.0.0.1:8080
  name: local
contexts:
- context:
    cluster: local
    user: ""
  name: local
current-context: local
kind: Config
preferences: {}
users: []
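The three `kubectl config` commands above (set-cluster, set-context, use-context) boil down to the file that `kubectl config view` prints. As a sketch of what they produce, the same kubeconfig can be written directly. The function name and its arguments are hypothetical, and like the setup above it talks to the insecure port 8080 over plain HTTP, so it only fits a local test VM.

```shell
# Hypothetical helper: write a kubeconfig equivalent to the
# set-cluster / set-context / use-context steps above.
write_local_kubeconfig() {
  local target="$1" server="${2:-http://127.0.0.1:8080}"
  cat > "$target" <<EOF
apiVersion: v1
clusters:
- cluster:
    insecure-skip-tls-verify: true
    server: $server
  name: local
contexts:
- context:
    cluster: local
    user: ""
  name: local
current-context: local
kind: Config
preferences: {}
users: []
EOF
}

# Usage:
# write_local_kubeconfig "$HOME/.kube/config" && kubectl cluster-info
```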

# kubectl cluster-info

Kubernetes master is running at http://localhost:8080

kube-dns is running at http://localhost:8080/api/v1/proxy/namespaces/kube-system/services/kube-dns

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# kubectl get nodes

NAME STATUS AGE

ubuntu Ready 5d
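Once networking is up, the STATUS column of `kubectl get nodes` should read Ready for every node. A minimal sketch with a hypothetical helper name; it relies only on STATUS being the second column, which holds for both `kubectl get nodes` layouts in this post (with and without ROLES).

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and succeed
# only if there is at least one node and every STATUS is exactly "Ready".
all_nodes_ready() {
  awk 'NR > 1 { n++; if ($2 != "Ready") bad++ }
       END { exit !(n > 0 && bad == 0) }'
}

# Usage:
# kubectl get nodes | all_nodes_ready && echo "all nodes Ready"
```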

In sum, here is a quick script to build an all-in-one Kubernetes VM.

================ Build the VM ================

$ vi Vagrantfile (just an example)

Vagrant.configure(2) do |config|
  config.vm.define "ss03" do |ss03|
    ss03.vm.box = "ubuntu/xenial64"
    ss03.vm.box_version = "20171011.0.0"
    # insert_key expects a boolean; the string 'false' would be truthy in Ruby
    ss03.ssh.insert_key = false
    ss03.vm.hostname = "ss03.ss.idv"
    ss03.vm.network "private_network", ip: "172.28.128.43", name: "vboxnet0"
    ss03.vm.provider :virtualbox do |vb|
      vb.memory = 4096
      vb.cpus = 2
    end
  end
end


Preparation

$ sudo apt-get update && sudo apt-get install -y apt-transport-https
$ curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
$ sudo su -
# cat <<EOF > /etc/apt/sources.list.d/kubernetes.list
deb http://apt.kubernetes.io/ kubernetes-xenial main
EOF
# exit

================= Build the k8s cluster ================

# vi k8s.sh

#!/bin/bash
sudo apt-get update -y
sudo apt-get install -y docker.io
sudo usermod -aG docker $(whoami)
sudo apt-get install -y kubelet kubeadm kubectl kubernetes-cni
sudo kubeadm init --pod-network-cidr=10.244.0.0/16
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
kubectl taint nodes --all node-role.kubernetes.io/master-
kubectl apply -f https://git.io/weave-kube
kubectl config use-context kubernetes-admin@kubernetes
# Optional: label the node. The nodeSelector (nodenum: ss03) in test.yaml below
# only matches a node that carries this label.
#kubectl label nodes ss03 nodenum=ss03
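Right after `kubeadm init` and the Weave apply, the node and kube-dns can take a while to settle, as mentioned earlier. If you extend k8s.sh, a small retry helper keeps later steps from racing ahead. This is a sketch with a hypothetical name, not part of kubeadm or kubectl.

```shell
# Hypothetical helper: run a command up to TRIES times, one second apart,
# until it succeeds. Returns 0 on success, else the last exit status.
wait_until() {
  local tries="$1" status
  shift
  while :; do
    "$@" && return 0
    status=$?
    tries=$((tries - 1))
    [ "$tries" -le 0 ] && return "$status"
    sleep 1
  done
}

# Usage, e.g. after the Weave apply (roughly 5 minutes at most):
# wait_until 300 sh -c 'kubectl get nodes | grep -qw Ready'
```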

================= Test: create a pod from a YAML file ================

# vi test.yaml

apiVersion: batch/v1
kind: Job
metadata:
  name: demo1
spec:
  activeDeadlineSeconds: 300
  template:
    metadata:
      name: demo1
    spec:
      volumes:
      - name: data
        emptyDir:
          medium: Memory
      containers:
      - name: demo1-1
        image: swiftstack/alpine_bash_ffmpeg_swift
        imagePullPolicy: IfNotPresent
        command:
        - "ping"
        - "-c"
        - "3"
        - "cloud.swiftstack.com"
        volumeMounts:
        - mountPath: /data
          name: data
      nodeSelector:
        nodenum: ss03
      restartPolicy: Never
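To run the same Job with another name or on another node without editing test.yaml, the manifest can be generated. A minimal sketch with a hypothetical helper name, reproducing test.yaml with the job name, node label value and ping target as parameters. The target node must carry the matching `nodenum` label, or the pod stays Pending.

```shell
# Hypothetical helper: print a Job manifest like test.yaml above, with the
# job name, nodenum label value and ping host as parameters.
render_job() {
  local name="$1" node="$2" host="${3:-cloud.swiftstack.com}"
  cat <<EOF
apiVersion: batch/v1
kind: Job
metadata:
  name: $name
spec:
  activeDeadlineSeconds: 300
  template:
    metadata:
      name: $name
    spec:
      volumes:
      - name: data
        emptyDir:
          medium: Memory
      containers:
      - name: $name-1
        image: swiftstack/alpine_bash_ffmpeg_swift
        imagePullPolicy: IfNotPresent
        command: ["ping", "-c", "3", "$host"]
        volumeMounts:
        - mountPath: /data
          name: data
      nodeSelector:
        nodenum: $node
      restartPolicy: Never
EOF
}

# Usage: "-f -" makes kubectl read the manifest from stdin.
# render_job demo2 ss03 | kubectl create -f -
```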

# kubectl create -f test.yaml

# kubectl describe job demo1

Name:                     demo1
Namespace:                default
Selector:                 controller-uid=e65339a7-d48c-11e7-bca2-0214683e8447
Labels:                   controller-uid=e65339a7-d48c-11e7-bca2-0214683e8447
                          job-name=demo1
Annotations:              <none>
Parallelism:              1
Completions:              1
Start Time:               Tue, 28 Nov 2017 22:38:48 +0000
Active Deadline Seconds:  300s
Pods Statuses:            1 Running / 0 Succeeded / 0 Failed
Pod Template:
  Labels:  controller-uid=e65339a7-d48c-11e7-bca2-0214683e8447
           job-name=demo1
  Containers:
   demo1-1:
    Image:  swiftstack/alpine_bash_ffmpeg_swift
    Port:   <none>
    Command:
      ping
      -c
      3
      cloud.swiftstack.com
    Environment:  <none>
    Mounts:
      /data from data (rw)
  Volumes:
   data:
    Type:    EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:  Memory
Events:
  Type    Reason            Age   From            Message
  ----    ------            ----  ----            -------
  Normal  SuccessfulCreate  16s   job-controller  Created pod: demo1-bkfld

# kubectl describe pod demo1-bkfld

Name:           demo1-bkfld
Namespace:      default
Node:           ss03/10.0.2.15
Start Time:     Tue, 28 Nov 2017 22:38:48 +0000
Labels:         controller-uid=e65339a7-d48c-11e7-bca2-0214683e8447
                job-name=demo1
Annotations:    kubernetes.io/created-by={"kind":"SerializedReference","apiVersion":"v1","reference":{"kind":"Job","namespace":"default","name":"demo1","uid":"e65339a7-d48c-11e7-bca2-0214683e8447","apiVersion":"batch...
Status:         Pending
IP:
Created By:     Job/demo1
Controlled By:  Job/demo1
Containers:
  demo1-1:
    Container ID:
    Image:          swiftstack/alpine_bash_ffmpeg_swift
    Image ID:
    Port:           <none>
    Command:
      ping
      -c
      3
      cloud.swiftstack.com
    State:          Waiting
      Reason:       ContainerCreating
    Ready:          False
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /data from data (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-r82wn (ro)
Conditions:
  Type           Status
  Initialized    True
  Ready          False
  PodScheduled   True
Volumes:
  data:
    Type:    EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:  Memory
  default-token-r82wn:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-r82wn
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  nodenum=ss03
Tolerations:     node.alpha.kubernetes.io/notReady:NoExecute for 300s
                 node.alpha.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type     Reason                  Age               From               Message
  ----     ------                  ----              ----               -------
  Normal   Scheduled               43s               default-scheduler  Successfully assigned demo1-bkfld to ss03
  Normal   SuccessfulMountVolume   43s               kubelet, ss03      MountVolume.SetUp succeeded for volume "data"
  Normal   SuccessfulMountVolume   43s               kubelet, ss03      MountVolume.SetUp succeeded for volume "default-token-r82wn"
  Warning  FailedCreatePodSandBox  43s               kubelet, ss03      Failed create pod sandbox.
  Warning  FailedSync              3s (x5 over 43s)  kubelet, ss03      Error syncing pod
  Normal   SandboxChanged          3s (x4 over 42s)  kubelet, ss03      Pod sandbox changed, it will be killed and re-created.
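When a pod sits in ContainerCreating like demo1-bkfld, the Warning events are the place to start (here FailedCreatePodSandBox and FailedSync). A minimal sketch with a hypothetical helper name that prints the Reason of each Warning event; it assumes the multi-line Events table that `kubectl describe` prints on a terminal.

```shell
# Hypothetical helper: read `kubectl describe pod ...` output on stdin and
# print the Reason of every Warning row in the Events table.
warning_events() {
  awk '/^Events:/ { in_events = 1; next }
       in_events && $1 == "Warning" { print $2 }'
}

# Usage:
# kubectl describe pod demo1-bkfld | warning_events
```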

$ kubectl get nodes

NAME      STATUS    ROLES     AGE       VERSION
ss03      Ready     master    9m        v1.8.4

$ kubectl get pods

NAME          READY     STATUS              RESTARTS   AGE
demo1-bkfld   0/1       ContainerCreating   0          2m

$ kubectl get jobs

NAME      DESIRED   SUCCESSFUL   AGE
demo1     1         0            2m
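Finally, to script the "is my Job finished?" check, here is a minimal sketch with a hypothetical helper name that compares SUCCESSFUL with DESIRED. It assumes the kubectl 1.8-era `get jobs` layout shown above (NAME DESIRED SUCCESSFUL AGE); newer kubectl versions print a COMPLETIONS column instead, so the awk fields would need adjusting.

```shell
# Hypothetical helper: read `kubectl get jobs` output (NAME DESIRED SUCCESSFUL AGE)
# on stdin and succeed if job NAME has SUCCESSFUL >= DESIRED.
job_done() {
  awk -v job="$1" 'NR > 1 && $1 == job { found = 1; ok = ($3 >= $2) }
                   END { exit !(found && ok) }'
}

# Usage:
# kubectl get jobs | job_done demo1 && echo "demo1 finished"
```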
