As I mentioned above, I'm using Docker (http://chianingwang.blogspot.com/2015/02/docker-poc-lab-ubuntu-1404-via.html) as the container platform. Here are the steps for Docker installation.

Docker Installation

For example, if your user is vagrant, just run:

$ sudo usermod -aG docker vagrant

If you are on CentOS, try the following (from https://docs.docker.com/engine/installation/linux/centos/).

$ sudo yum update

$ sudo tee /etc/yum.repos.d/docker.repo <<-'EOF'
[dockerrepo]
name=Docker Repository
baseurl=https://yum.dockerproject.org/repo/main/centos/7/
enabled=1
gpgcheck=1
gpgkey=https://yum.dockerproject.org/gpg
EOF

$ sudo yum install docker-engine

$ sudo systemctl enable docker.service

$ sudo systemctl start docker

Kubernetes Installation and Configuration

Update sources.list.d for apt

- kubeadm: admin tool that simplifies the process of starting a Kubernetes cluster.

- kubectl: the cluster command-line tool.

- kubelet: the node manager. To use kubeadm we'll also need kubectl and kubelet.

- kubernetes-cni: CNI (Container Network Interface) support for multi-host networking, should we add additional nodes.

Install required Kubernetes binaries

1. Install Kubernetes basics

2. Start the Kubernetes Master Component

3. Exploring the cluster

Enable Networking

Configure the Kubernetes cluster via kubectl

Set Up the Cluster's Context

$ wget -qO- https://get.docker.com/ | sh

###To add your user to the “docker” group, execute the following command:

$ sudo usermod -aG docker <user>

$ sudo reboot

###Verify Docker Installation

$ docker --version

Or

$ sudo systemctl status docker

Or

$ ls -l /var/run/docker.sock

Or

$ docker version

(this should show both the client and the server versions)

Or

$ docker info
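The same checks can be scripted. Below is a minimal Python sketch (not part of the original steps) that tests whether /var/run/docker.sock exists and is a Unix socket, and whether `docker version` exits successfully. It assumes that `docker version` exits non-zero when the client cannot reach the daemon, for example before your new docker group membership takes effect. The function names are hypothetical.

```python
import os
import stat
import subprocess


def docker_socket_present(path: str = "/var/run/docker.sock") -> bool:
    """True if `path` exists and is a Unix domain socket (the leading 's' in `ls -l`)."""
    try:
        mode = os.stat(path).st_mode
    except FileNotFoundError:
        return False
    return stat.S_ISSOCK(mode)


def docker_daemon_responds() -> bool:
    """True if `docker version` exits 0, i.e. the client could talk to the daemon."""
    try:
        result = subprocess.run(["docker", "version"],
                                capture_output=True, text=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        # docker CLI missing or not executable, or the daemon never answered
        return False
    return result.returncode == 0


if __name__ == "__main__":
    print("docker.sock is a socket:", docker_socket_present())
    print("docker daemon responds:", docker_daemon_responds())
```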

Once Docker is ready, we can start installing Kubernetes.

a. # curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -

b. # cat <<EOF > /etc/apt/sources.list.d/kubernetes.list

> deb http://apt.kubernetes.io/ kubernetes-xenial main

> EOF

c. # apt-get update

PS: Kubernetes 1.4 adds support for the alpha tool kubeadm.

This is for Ubuntu 16.04; for other operating systems such as CentOS or HypriotOS, refer to the official Kubernetes installation guide.

Ubuntu

# apt-get install -y kubelet kubeadm kubectl kubernetes-cni

CentOS

# setenforce 0

# yum install -y kubelet kubeadm kubectl kubernetes-cni

[vagrant@ss-12 ~]$ sudo su -

Last login: Fri Nov 18 19:11:47 UTC 2016 on pts/0

[root@ss-12 ~]# systemctl enable docker && systemctl start docker

[root@ss-12 ~]# systemctl enable kubelet && systemctl start kubelet

# kubeadm init

For example

$ sudo kubeadm init

Running pre-flight checks

<master/tokens> generated token: "c9e394.b98e34623bf468f4"

<master/pki> generated Certificate Authority key and certificate:

Issuer: CN=kubernetes | Subject: CN=kubernetes | CA: true

Not before: 2016-11-18 19:14:06 +0000 UTC Not After: 2026-11-16 19:14:06 +0000 UTC

Public: /etc/kubernetes/pki/ca-pub.pem

Private: /etc/kubernetes/pki/ca-key.pem

Cert: /etc/kubernetes/pki/ca.pem

<master/pki> generated API Server key and certificate:

Issuer: CN=kubernetes | Subject: CN=kube-apiserver | CA: false

Not before: 2016-11-18 19:14:06 +0000 UTC Not After: 2017-11-18 19:14:06 +0000 UTC

Alternate Names: [10.0.2.15 10.96.0.1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local]

Public: /etc/kubernetes/pki/apiserver-pub.pem

Private: /etc/kubernetes/pki/apiserver-key.pem

Cert: /etc/kubernetes/pki/apiserver.pem

<master/pki> generated Service Account Signing keys:

Public: /etc/kubernetes/pki/sa-pub.pem

Private: /etc/kubernetes/pki/sa-key.pem

<master/pki> created keys and certificates in "/etc/kubernetes/pki"

<util/kubeconfig> created "/etc/kubernetes/kubelet.conf"

<util/kubeconfig> created "/etc/kubernetes/admin.conf"

<master/apiclient> created API client configuration

<master/apiclient> created API client, waiting for the control plane to become ready

<master/apiclient> all control plane components are healthy after 384.310122 seconds

<master/apiclient> waiting for at least one node to register and become ready

<master/apiclient> first node is ready after 3.004470 seconds

<master/apiclient> attempting a test deployment

<master/apiclient> test deployment succeeded

<master/discovery> created essential addon: kube-discovery, waiting for it to become ready

<master/discovery> kube-discovery is ready after 16.003111 seconds

<master/addons> created essential addon: kube-proxy

<master/addons> created essential addon: kube-dns

Kubernetes master initialised successfully!

You can now join any number of machines by running the following on each node:

# ps -fwwp `pgrep kubelet`

UID PID PPID C STIME TTY TIME CMD

root 817 1 3 11:25 ? 00:01:25 /usr/bin/kubelet --kubeconfig=/etc/kubernetes/kubelet.conf --require-kubeconfig=true --pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true --network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin --cluster-dns=100.64.0.10 --cluster-domain=cluster.local --v=4

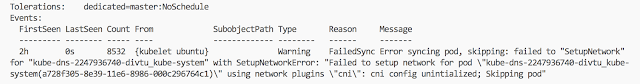

The very last line is the clue to our problem: "cni config uninitialized". Our system is configured to use CNI, but we have not installed a CNI networking system. We can easily add the Weave CNI VXLAN-based container networking drivers using a pod spec from the Internet.

PS: if you run $ kubectl get pods --all-namespaces, you should be able to see this error message at the end.
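To confirm this diagnosis from a script, here is a small Python sketch (hypothetical helpers `kubelet_flags` and `cni_config_files`) that parses a kubelet command line like the one shown by `ps -fwwp` above and lists the network config files in its --cni-conf-dir. An empty directory corresponds to the "cni config uninitialized" state. Two assumptions: flags use the `--key=value` form seen in that ps output, and the accepted config extensions are .conf, .conflist and .json, based on the CNI library's loader.

```python
import os
import shlex

# Assumption: extensions the CNI config loader accepts in --cni-conf-dir.
CNI_CONF_EXTENSIONS = (".conf", ".conflist", ".json")


def kubelet_flags(cmdline: str) -> dict:
    """Parse '--key=value' and bare '--flag' tokens from a kubelet command line."""
    flags = {}
    for token in shlex.split(cmdline):
        if not token.startswith("--"):
            continue  # the binary path or positional arguments
        key, sep, value = token[2:].partition("=")
        flags[key] = value if sep else "true"
    return flags


def cni_config_files(conf_dir: str) -> list:
    """Sorted CNI network config files in conf_dir; empty means nothing is installed yet."""
    if not os.path.isdir(conf_dir):
        return []
    return sorted(name for name in os.listdir(conf_dir)
                  if name.endswith(CNI_CONF_EXTENSIONS))


if __name__ == "__main__":
    # Abridged from the `ps -fwwp` output above.
    cmd = ("/usr/bin/kubelet --network-plugin=cni "
           "--cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin")
    flags = kubelet_flags(cmd)
    conf_dir = flags.get("cni-conf-dir", "/etc/cni/net.d")
    if flags.get("network-plugin") == "cni" and not cni_config_files(conf_dir):
        print(f"kubelet uses CNI, but {conf_dir} has no network config yet")
    else:
        print("CNI config found:", cni_config_files(conf_dir))
```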

Thus, here we install the Weave CNI drivers.

You must install a pod network add-on so that your pods can communicate with each other. It is necessary to do this before you try to deploy any applications to your cluster, and before kube-dns will start up. Note also that kubeadm only supports CNI based networks and therefore kubenet based networks will not work.

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

# If you just want to run an all-in-one development environment, remove the "dedicated" taint from all nodes, including the master node. This means the scheduler will be able to schedule pods everywhere.

$ kubectl taint nodes --all dedicated-

node "ss02" untainted

# kubectl apply -f https://git.io/weave-kube

daemonset "weave-net" created

This creates the weave-net pod in the kube-system namespace (weave-net-2ifri in the listing below).

Kubernetes's own components also run as containers, so we can check all the pods created for running Kubernetes:

user@ubuntu:~$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system etcd-ubuntu 1/1 Running 1 5d

kube-system kube-apiserver-ubuntu 1/1 Running 3 5d

kube-system kube-controller-manager-ubuntu 1/1 Running 1 5d

kube-system kube-discovery-982812725-h210x 1/1 Running 1 5d

kube-system kube-dns-2247936740-swjx1 3/3 Running 3 5d

kube-system kube-proxy-amd64-s4hor 1/1 Running 1 5d

kube-system kube-scheduler-ubuntu 1/1 Running 1 5d

kube-system weave-net-2ifri

PS: The git.io site is a URL shortening service from GitHub. The weave-kube route points to a Kubernetes spec for a DaemonSet, which is a resource that runs a pod on every node in a cluster.

This Weave DaemonSet ensures that the weaveworks/weave-kube:1.7.1 image is running in a pod on all hosts, providing support for multi-host networking throughout the cluster.
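To make the DaemonSet idea concrete, here is a toy Python model (a simplification, not the real DaemonSet controller): given the cluster's nodes and how many weave-net pods each one runs, it reports which nodes still need a pod and which have extras. The real controller also honors node selectors, taints and tolerations, which this sketch ignores; `reconcile_daemonset` and the node name "node2" are hypothetical.

```python
def reconcile_daemonset(nodes, pods_per_node):
    """Toy DaemonSet reconciliation: every node should run exactly one pod.

    nodes: iterable of node names in the cluster.
    pods_per_node: dict of node name -> count of this DaemonSet's pods on it.
    Returns (nodes that need a pod created, nodes with surplus pods to delete).
    """
    nodes = list(nodes)  # allow any iterable; we walk it twice
    to_create = sorted(n for n in nodes if pods_per_node.get(n, 0) == 0)
    to_delete = sorted(n for n in nodes if pods_per_node.get(n, 0) > 1)
    return to_create, to_delete


if __name__ == "__main__":
    # Hypothetical: the master ss-12 already runs weave-net and "node2" just joined.
    print(reconcile_daemonset(["ss-12", "node2"], {"ss-12": 1}))
    # prints (['node2'], [])
```

This is why adding a node is enough to get Weave networking on it: the controller notices the node has no pod and creates one.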

PS: Sometimes kube-dns might fail because the network hasn't been configured properly. After you set up the Kubernetes network, everything should gradually come back up.

$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system dummy-2088944543-mn4d6 1/1 Running 0 10m

kube-system etcd-ss-12.swiftstack.idv 1/1 Running 0 9m

kube-system kube-apiserver-ss-12.swiftstack.idv 1/1 Running 6 9m

kube-system kube-controller-manager-ss-12.swiftstack.idv 1/1 Running 0 9m

kube-system kube-discovery-1150918428-pci3z 1/1 Running 0 10m

kube-system kube-dns-654381707-oom3u 2/3 Running 4 10m

kube-system kube-proxy-fqlgx 1/1 Running 0 10m

kube-system kube-scheduler-ss-12.swiftstack.idv 1/1 Running 0 10m

kube-system weave-net-xbaiq 2/2 Running 0 5m
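Since kube-dns shows 2/3 in the listing above, it helps to spot pods whose READY column is not n/n. Here is a minimal Python sketch, assuming the plain whitespace-separated output of `kubectl get pods` (with or without `--all-namespaces`); `not_ready_pods` and the file name not_ready.py are hypothetical.

```python
import sys


def not_ready_pods(listing: str) -> list:
    """Return (namespace, name, ready) for each pod whose READY column is not n/n.

    `listing` is the whitespace-separated output of `kubectl get pods`,
    header line included; namespace is "" when --all-namespaces was not used.
    """
    rows = [line.split() for line in listing.splitlines() if line.strip()]
    if not rows:
        return []
    header, body = rows[0], rows[1:]
    col = {name: i for i, name in enumerate(header)}
    ready_i = col["READY"]
    result = []
    for row in body:
        if len(row) <= ready_i:
            continue  # truncated row without a READY value
        ready, total = row[ready_i].split("/")
        if ready != total:
            namespace = row[col["NAMESPACE"]] if "NAMESPACE" in col else ""
            result.append((namespace, row[col["NAME"]], row[ready_i]))
    return result


if __name__ == "__main__":
    for namespace, name, ready in not_ready_pods(sys.stdin.read()):
        label = f"{namespace}/{name}" if namespace else name
        print(f"{label}: only {ready} containers ready")
```

Usage: `kubectl get pods --all-namespaces | python3 not_ready.py`. For the listing above it reports only the kube-dns pod.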

kubectl, kubectl help config, or kubectl config view can be used to inspect kubectl's configuration. For kubectl to control a remote cluster, we must specify the cluster endpoint to kubectl.

# curl http://localhost:8080

For example:

$ curl http://localhost:8080

{

"paths": [

"/api",

"/api/v1",

"/apis",

"/apis/apps",

"/apis/apps/v1alpha1",

"/apis/authentication.k8s.io",

"/apis/authentication.k8s.io/v1beta1",

"/apis/authorization.k8s.io",

"/apis/authorization.k8s.io/v1beta1",

"/apis/autoscaling",

"/apis/autoscaling/v1",

"/apis/batch",

"/apis/batch/v1",

"/apis/batch/v2alpha1",

"/apis/certificates.k8s.io",

"/apis/certificates.k8s.io/v1alpha1",

"/apis/extensions",

"/apis/extensions/v1beta1",

"/apis/policy",

"/apis/policy/v1alpha1",

"/apis/rbac.authorization.k8s.io",

"/apis/rbac.authorization.k8s.io/v1alpha1",

"/apis/storage.k8s.io",

"/apis/storage.k8s.io/v1beta1",

"/healthz",

"/healthz/ping",

"/logs",

"/metrics",

"/swaggerapi/",

"/ui/",

"/version"

]

}
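If you want the API groups from that response in a more readable form, here is a small standard-library Python sketch. It assumes the insecure local endpoint http://localhost:8080 used in this post; `fetch_paths` and `group_versions` are hypothetical helper names.

```python
import json
import urllib.request


def fetch_paths(endpoint: str = "http://localhost:8080") -> list:
    """GET the API server root (like the curl above) and return its "paths" list."""
    with urllib.request.urlopen(endpoint, timeout=5) as resp:
        return json.load(resp)["paths"]


def group_versions(paths: list) -> dict:
    """Map each API group under /apis/ to the versions listed for it."""
    groups = {}
    for path in paths:
        parts = path.strip("/").split("/")
        if parts[0] != "apis" or len(parts) < 2:
            continue  # core /api, /healthz, /metrics, the bare /apis entry, ...
        versions = groups.setdefault(parts[1], [])
        if len(parts) == 3:
            versions.append(parts[2])
    return groups


if __name__ == "__main__":
    for group, versions in sorted(group_versions(fetch_paths()).items()):
        print(f"{group}: {', '.join(versions)}")
```

For the response above, `batch` maps to `v1` and `v2alpha1`, and the core `/api/v1` group is skipped because it lives outside `/apis`.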

$ kubectl config set-cluster local --server=http://127.0.0.1:8080 --insecure-skip-tls-verify=true

cluster "local" set.

# ls -la .kube/

total 16

drwxr-xr-x 3 root root 4096 Oct 25 06:39 .

drwx------ 4 root root 4096 Oct 24 23:37 ..

-rw------- 1 root root 191 Oct 25 06:39 config

drwxr-xr-x 3 root root 4096 Oct 24 23:37 schema

# cat .kube/config

apiVersion: v1
clusters:
- cluster:
    insecure-skip-tls-verify: true
    server: http://127.0.0.1:8080
  name: local
contexts: []
current-context: ""
kind: Config
preferences: {}
users: []

# kubectl config set-context local --cluster=local

context "local" set.

# kubectl config use-context local

switched to context "local".

# cat .kube/config

or

# kubectl config view

apiVersion: v1
clusters:
- cluster:
    insecure-skip-tls-verify: true
    server: http://127.0.0.1:8080
  name: local
contexts:
- context:
    cluster: local
    user: ""
  name: local
current-context: local
kind: Config
preferences: {}
users: []
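kubectl picks its endpoint by following current-context to a context, then to that context's cluster, then to the cluster's server. The Python sketch below walks the same chain on a config like the one shown above, read via `kubectl config view -o json`; `current_server` is a hypothetical helper name, and it ignores users and credentials entirely.

```python
import json
import subprocess


def _named(entries, name, kind):
    """Return entry[kind] for the entry in `entries` whose "name" matches."""
    for entry in entries or []:
        if entry.get("name") == name:
            return entry[kind]
    raise KeyError(f"no {kind} named {name!r} in kubeconfig")


def current_server(cfg: dict) -> str:
    """Follow current-context -> context.cluster -> cluster.server."""
    context_name = cfg.get("current-context") or ""
    if not context_name:
        raise ValueError("current-context is empty; run `kubectl config use-context`")
    context = _named(cfg.get("contexts"), context_name, "context")
    cluster = _named(cfg.get("clusters"), context["cluster"], "cluster")
    return cluster["server"]


if __name__ == "__main__":
    out = subprocess.run(["kubectl", "config", "view", "-o", "json"],
                         capture_output=True, text=True, check=True).stdout
    print(current_server(json.loads(out)))
```

On the first config in this post (empty contexts, `current-context: ""`) the sketch raises ValueError, which is why the set-context and use-context steps are needed.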

# kubectl cluster-info

Kubernetes master is running at http://localhost:8080

kube-dns is running at http://localhost:8080/api/v1/proxy/namespaces/kube-system/services/kube-dns

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# kubectl get nodes

NAME STATUS AGE

ubuntu Ready 5d

In sum, here is a quick script to set up your all-in-one Kubernetes VM.

================for build VM================

$ vi Vagrantfile (just an example)

Vagrant.configure(2) do |config|
  config.vm.define "ss03" do |ss03|
    ss03.vm.box = "ubuntu/xenial64"
    ss03.vm.box_version = "20171011.0.0"
    ss03.ssh.insert_key = false  # boolean; the string 'false' would be truthy in Ruby
    ss03.vm.hostname = "ss03.ss.idv"
    ss03.vm.network "private_network", ip: "172.28.128.43", name: "vboxnet0"
    ss03.vm.provider :virtualbox do |vb|
      vb.memory = 4096
      vb.cpus = 2
    end
  end
end

Preparation

$ sudo apt-get update && sudo apt-get install -y apt-transport-https

$ curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

$ sudo su -

# cat <<EOF > /etc/apt/sources.list.d/kubernetes.list
deb http://apt.kubernetes.io/ kubernetes-xenial main
EOF

# exit

=================for build k8s cluster================

# vi k8s.sh

#!/bin/bash
sudo apt-get update -y
sudo apt-get install -y docker.io
sudo usermod -aG docker $(whoami)
sudo apt-get install -y kubelet kubeadm kubectl kubernetes-cni
sudo kubeadm init --pod-network-cidr=10.244.0.0/16
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
kubectl taint nodes --all node-role.kubernetes.io/master-
kubectl apply -f https://git.io/weave-kube
kubectl config use-context kubernetes-admin@kubernetes
# optional: label the node so the nodeSelector in test.yaml can match it
#kubectl label nodes ss03 nodenum=ss03

=================for test yaml create pod================

# vi test.yaml

apiVersion: batch/v1
kind: Job
metadata:
  name: demo1
spec:
  activeDeadlineSeconds: 300
  template:
    metadata:
      name: demo1
    spec:
      volumes:
      - name: data
        emptyDir:
          medium: Memory
      containers:
      - name: demo1-1
        image: swiftstack/alpine_bash_ffmpeg_swift
        imagePullPolicy: IfNotPresent
        command:
        - "ping"
        - "-c"
        - "3"
        - "cloud.swiftstack.com"
        volumeMounts:
        - mountPath: /data
          name: data
      nodeSelector:
        nodenum: ss03
      restartPolicy: Never
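If you generate Jobs like this from code, the same manifest can be built as a plain dict and emitted as JSON, which kubectl create -f also accepts. A minimal Python sketch; `demo_job`, its parameters and the file names demo_job.py/test.json are hypothetical, and the defaults mirror test.yaml.

```python
import json


def demo_job(name="demo1", node_label=("nodenum", "ss03"), host="cloud.swiftstack.com"):
    """Build the same Job as test.yaml as a plain dict."""
    key, value = node_label
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "activeDeadlineSeconds": 300,  # kill the Job after 5 minutes
            "template": {
                "metadata": {"name": name},
                "spec": {
                    "volumes": [{"name": "data", "emptyDir": {"medium": "Memory"}}],
                    "containers": [{
                        "name": f"{name}-1",
                        "image": "swiftstack/alpine_bash_ffmpeg_swift",
                        "imagePullPolicy": "IfNotPresent",
                        "command": ["ping", "-c", "3", host],
                        "volumeMounts": [{"mountPath": "/data", "name": "data"}],
                    }],
                    "nodeSelector": {key: value},  # node must carry this label
                    "restartPolicy": "Never",
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(demo_job(), indent=2))
```

Usage: `python3 demo_job.py > test.json && kubectl create -f test.json`.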

# kubectl create -f test.yaml

# kubectl describe job demo1
Name:           demo1
Namespace:      default
Selector:       controller-uid=e65339a7-d48c-11e7-bca2-0214683e8447
Labels:         controller-uid=e65339a7-d48c-11e7-bca2-0214683e8447
                job-name=demo1
Annotations:    <none>
Parallelism:    1
Completions:    1
Start Time:     Tue, 28 Nov 2017 22:38:48 +0000
Active Deadline Seconds:  300s
Pods Statuses:  1 Running / 0 Succeeded / 0 Failed
Pod Template:
  Labels:  controller-uid=e65339a7-d48c-11e7-bca2-0214683e8447
           job-name=demo1
  Containers:
   demo1-1:
    Image:  swiftstack/alpine_bash_ffmpeg_swift
    Port:   <none>
    Command:
      ping
      -c
      3
      cloud.swiftstack.com
    Environment:  <none>
    Mounts:
      /data from data (rw)
  Volumes:
   data:
    Type:    EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:  Memory
Events:
  Type    Reason            Age  From            Message
  ----    ------            ---- ----            -------
  Normal  SuccessfulCreate  16s  job-controller  Created pod: demo1-bkfld

# kubectl describe pod demo1-bkfld
Name:           demo1-bkfld
Namespace:      default
Node:           ss03/10.0.2.15
Start Time:     Tue, 28 Nov 2017 22:38:48 +0000
Labels:         controller-uid=e65339a7-d48c-11e7-bca2-0214683e8447
                job-name=demo1
Annotations:    kubernetes.io/created-by={"kind":"SerializedReference","apiVersion":"v1","reference":{"kind":"Job","namespace":"default","name":"demo1","uid":"e65339a7-d48c-11e7-bca2-0214683e8447","apiVersion":"batch...
Status:         Pending
IP:
Created By:     Job/demo1
Controlled By:  Job/demo1
Containers:
  demo1-1:
    Container ID:
    Image:          swiftstack/alpine_bash_ffmpeg_swift
    Image ID:
    Port:           <none>
    Command:
      ping
      -c
      3
      cloud.swiftstack.com
    State:          Waiting
      Reason:       ContainerCreating
    Ready:          False
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /data from data (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-r82wn (ro)
Conditions:
  Type           Status
  Initialized    True
  Ready          False
  PodScheduled   True
Volumes:
  data:
    Type:    EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:  Memory
  default-token-r82wn:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-r82wn
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  nodenum=ss03
Tolerations:     node.alpha.kubernetes.io/notReady:NoExecute for 300s
                 node.alpha.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type     Reason                  Age               From               Message
  ----     ------                  ----              ----               -------
  Normal   Scheduled               43s               default-scheduler  Successfully assigned demo1-bkfld to ss03
  Normal   SuccessfulMountVolume   43s               kubelet, ss03      MountVolume.SetUp succeeded for volume "data"
  Normal   SuccessfulMountVolume   43s               kubelet, ss03      MountVolume.SetUp succeeded for volume "default-token-r82wn"
  Warning  FailedCreatePodSandBox  43s               kubelet, ss03      Failed create pod sandbox.
  Warning  FailedSync              3s (x5 over 43s)  kubelet, ss03      Error syncing pod
  Normal   SandboxChanged          3s (x4 over 42s)  kubelet, ss03      Pod sandbox changed, it will be killed and re-created.

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

ss03 Ready master 9m v1.8.4

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

demo1-bkfld 0/1 ContainerCreating 0 2m

$ kubectl get jobs

NAME DESIRED SUCCESSFUL AGE

demo1 1 0 2m
